
Using Virtual Reality, Students Help Visualize Climate Change Solutions at Point Lookout State Park

October 7, 2025

By Joe Zimmermann, science writer for the Maryland Department of Natural Resources

University of Maryland projects highlight adaptive management to sea-level rise and other changes

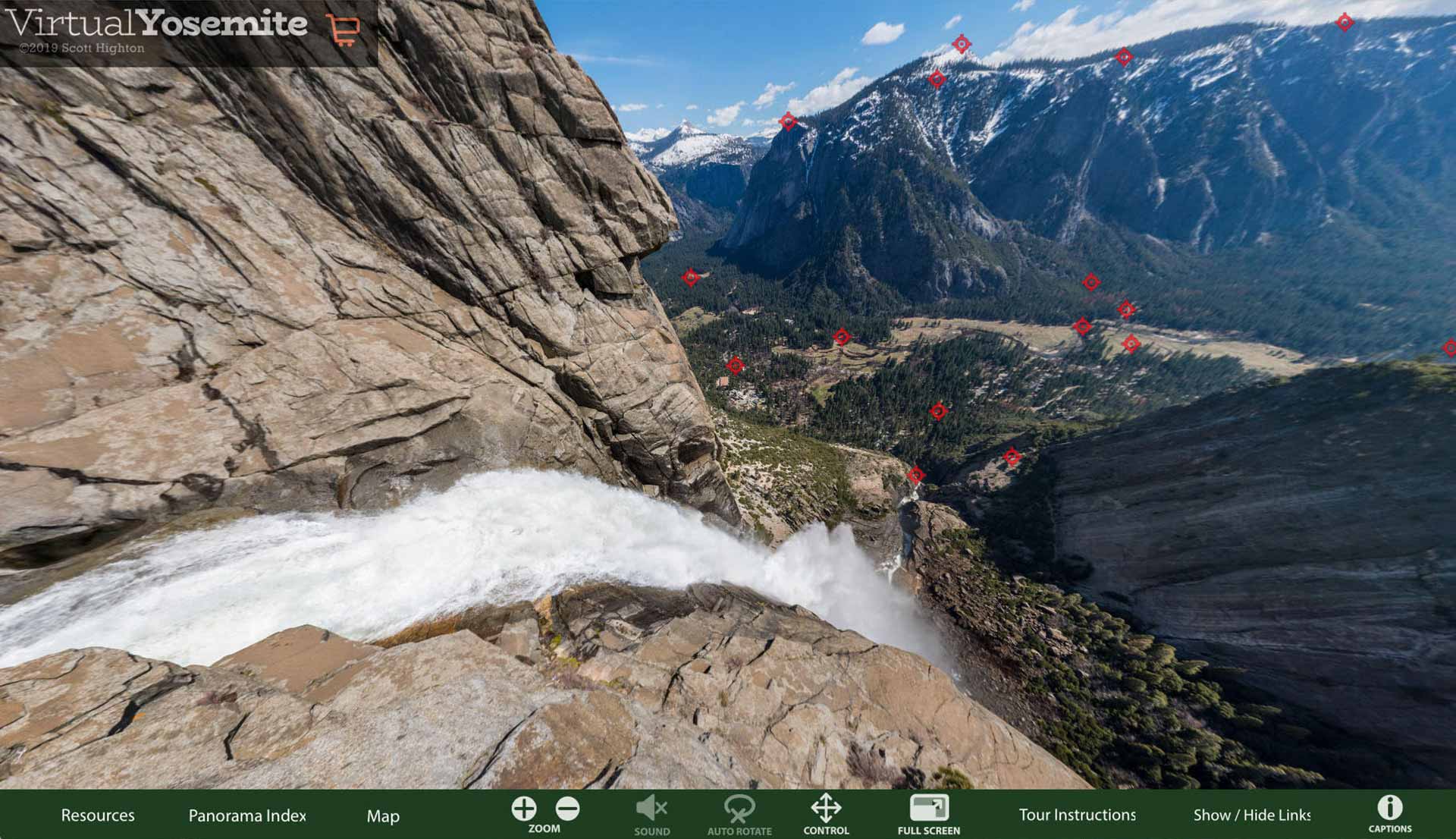

You’re on a walkway in a park. You can see trees, a road, a marsh and a coastline against a vibrant blue sky all around you.

Then, you hear the toll of a bell. The marsh expands and the water edges up the grass. Another bell, and the water creeps up to the base of the roadway. Eventually, when you look down, it’s under your feet: the raised walkway that once snaked through greenery is now surrounded by water.

Each toll of the bell represents 10 years passing, allowing viewers to see the effects of climate change and rising sea levels in a virtual space all around them. What you’re seeing is part of a series of projects by landscape architecture students at the University of Maryland, College Park that use virtual reality to visualize climate change at Point Lookout State Park, as well as possible adaptations to shifting conditions.

“When you see the water come under you, and hear the bird sounds turn to wave sounds, I think it helps people understand [climate change] in a different way,” said Nico Drummond, a landscape architecture major who was part of the team that designed the project that used the tolling bell.

The work began when Maryland Department of Natural Resources staff approached the university’s Partnership for Action Learning in Sustainability program about opportunities to highlight the effects of climate change in the state.

Chris Ellis, a landscape architecture professor, said the class wanted to look at a particular state park and landed on Point Lookout State Park as a fitting site. Located at the southernmost tip of St. Mary’s County at the confluence of the Potomac River and the Chesapeake Bay, Point Lookout is susceptible to sea-level rise and other effects of a changing environment. Sea levels could rise by 1.5 to 2.5 feet at the park over the next 25 to 50 years.

The class split into groups, with each one looking at a different area of the park and how it could change in the coming decades.

“The students were looking at the park from a large scale: what are the changes going to be in terms of sea-level rise,” Ellis said. “We started thinking, ‘What are the problems associated with that?’ And I’ll tell you, as we went through the semester, it was more like, ‘What are the opportunities that we can take advantage of?’ Because as the change happens, there are actually some really interesting things that may come from that.”

The student groups looked at a range of solutions that both protected the park land and offered new opportunities for recreation. Raised walkways cross areas where wetland buffers allow for marsh migration, and kayak trails pass by living shorelines and floating wetlands. Boardwalks include educational panels about their helical piers, which are adjustable for rising waters, and oyster reefs in Lake Conoy serve as living breakwaters to protect the marsh.

The projects were focused on keeping the park adaptive and accessible to people, even under changing conditions.

“We were excited to host this virtual reality visioning project at Point Lookout,” said Ranger Jonas Williams, director of planning for the Maryland Park Service. “The students did a phenomenal job illustrating how the park may change in the future, giving park visitors a chance to see what climate change could mean for this unique and vital landscape. Projects and partnerships like this help the Park Service engage the public in understanding risks and opportunities, while guiding planning and adaptation efforts not only at Point Lookout State Park but across other at-risk parks in the years ahead.”

The projects will be viewable online on Meta Quest TV, which streams virtual reality content.

As part of the planning for the project, which took place over the spring semester, students visited Point Lookout and got to see the areas they spent the semester designing projects for. Eashana Subramanian, a landscape architecture major with a minor in sustainability studies, said she had been to the park as a child with her parents and appreciated the chance to come back and put forward ideas about the park’s future.

“It was really meaningful that I got to work on this place that I’ve visited too,” she said.

https://news.maryland.gov/dnr/2025/10/07/using-virtual-reality-students-help-visualize-climate-change-solutions-at-point-lookout-state-park/

The Effects of Warm Versus Cool Color Palettes Within Virtual Reality

Research article

First published online September 3, 2025

Ciara A. Fabian, Susannah B. F. Paletz, and Jason Aston

Abstract

Color theory plays a crucial role in design, technology, and art. This study examined how two color groups, warm and cool, affect individuals’ emotional and physiological states, as measured using the Positive and Negative Affect Schedule and heart rate monitoring. Twenty participants viewed their assigned color simulation for 10 min within a virtual reality (VR) headset. Heart rate decreased significantly across the periods before, during, and after VR exposure. The interaction between time and color condition was significant, such that the decrease in heart rate was steeper for those in the cool condition. There were no statistically significant differences in self-reported Positive Affect; however, self-reported Negative Affect decreased significantly for both the Warm and Cool groups after the VR exposure. By clarifying how colors affect users, these results can help designers and developers make more informed decisions in the domains of UI/UX and front-end application development.

Introduction

Color theory is essential to designing interfaces (Kimmons, 2020). Whether for products or interior design, designers start by choosing a color palette. Given the growing use of Virtual Reality (VR; Thunström et al., 2022), we tested the effects of warm versus cool colors in VR on affect and physiological outcomes. This study replicates and extends prior research by addressing current gaps in the understanding of the effects of color groups within immersive headsets.

Background

Color theory and the effects of color have been studied in multiple domains (e.g., Art Therapy, Withrow, 2004). Designers use color theory to guide users’ attention (e.g., Fialkowski & Schofield, 2024). Colors can also establish a particular ambiance, such as in horror video games, where darker palettes are frequently used to create an ominous effect (Steinhaeusser et al., 2022). In environmental psychology, researchers have found that cool colors evoke feelings of calmness compared to warm colors, which increase physiological arousal (Yildirim et al., 2011).

These findings are similar to those within UI/UX design and video game development. Cha et al. (2020) evaluated the effects of color on heart rate and emotions by presenting the colors white, green, red, and blue to participants wearing an Oculus Rift VR headset for 2 min. They found that blue and green were rated more relaxing than red, which was more arousing and unpleasant (Cha et al., 2020). However, participants’ heart rates decreased for every color, although red produced the smallest decrease (Cha et al., 2020). Our study extends these findings to examine the effects of two broad color groups, warm and cool, on heart rate and emotions. Based on prior research on color theory’s potential psychological and physiological effects (AL-Ayash et al., 2016), we hypothesized that warm colors would increase heart rate and negative affect and decrease positive affect. We expected cool colors to decrease heart rate and negative affect while increasing positive affect.

Methods

Twenty participants were recruited from a Mid-Atlantic university and its surrounding areas. Participants were between the ages of 18 and 34 and self-reported no history of color blindness or epilepsy. Forty-five percent were men and 55% were women. Each participant was randomly assigned to a color condition, warm or cool, with 10 participants in each condition. The five warm colors were red (HEX #f60b0e), red-orange (HEX #ee1a25), orange (HEX #f04524), yellow-orange (HEX #ffb900), and yellow (HEX #fff100). The five cool colors were violet (HEX #432885), blue-violet (HEX #091b5d), blue (HEX #0051a2), blue-green (HEX #0d98ba), and green (HEX #04724c). In total, 10 colors were used, five for each color group. The colors yellow-green and red-violet were omitted because they are crossovers of warm and cool colors. The colors were shown using an Oculus Quest 2 VR headset.

As with prior research (e.g., Liew et al., 2022), we used the Positive and Negative Affect Schedule (PANAS) to measure emotions (Watson et al., 1988). Participants filled out the PANAS-GEN scale (Watson et al., 1988), which includes 20 emotions (e.g., Distressed, Inspired, see below) on a 1 to 5 scale (1 = very slightly or not at all, 2 = a little, 3 = moderately, 4 = quite a bit, and 5 = extremely). We collected PANAS data before and after the heart rate monitor was worn, which was also before and after the VR color exposure. We collected the participants’ heart rates using an OxyU Bluetooth monitor on their left wrists. The device continuously monitored the participant’s heart rate before, during, and after the VR exposure.

During the VR color exposure, the participants wore sound-canceling Peltor headphones to limit noise distractions. Participants were also asked to remain seated and not move excessively or remove the headset unless they wanted to end testing early, as any excess motion could result in unusable data from the heart rate monitor. While immersed in the Oculus Quest 2, participants viewed a VR room that changed colors depending on their assigned color condition for 10 min total (as in other color studies, Litscher et al., 2013). Participants assigned to the Cool condition were shown color variations of blue, violet, and green; participants assigned to the Warm condition were shown color variations of red, yellow, and orange. The VR color exposure was also screen-cast to an experimenter’s laptop to monitor the point of view and ensure that no technical problems affected the manipulation.

After the VR color exposure, participants’ noise-canceling headphones and Oculus Quest 2 were removed. Heart rate was monitored for an additional 2 min to get a final reading and test for any changes after the 10-min VR color exposure. After removing the OxyU monitor, participants filled out the PANAS again. To analyze the changes in heart rate for each participant, the average was calculated for each period: before, during, and after the VR color exposure. To aggregate each participant’s PANAS results, the 20 emotions were split into two groups. Positive Affect was the sum of Interested, Excited, Strong, Enthusiastic, Proud, Alert, Inspired, Determined, Attentive, and Active, and Negative Affect was the sum of Distressed, Upset, Guilty, Scared, Hostile, Irritable, Ashamed, Nervous, Jittery, and Afraid (Watson et al., 1988). Each subscale could therefore range from 10 to 50. Repeated Measures ANOVAs were used to test the effects of time and the two color conditions on heart rate and the two PANAS subscales.
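The two aggregation steps described above (summing PANAS items into subscales, and averaging heart rate within each period) can be sketched in Python. This is a minimal illustration, not the authors’ analysis code: the function names `score_panas` and `phase_means` and the data layouts (a dict of item ratings; a list of `(phase, bpm)` samples labeled "before", "during", or "after") are hypothetical assumptions.

```python
from statistics import mean

# PANAS item groupings as listed in the Methods (Watson et al., 1988).
POSITIVE_ITEMS = (
    "Interested", "Excited", "Strong", "Enthusiastic", "Proud",
    "Alert", "Inspired", "Determined", "Attentive", "Active",
)
NEGATIVE_ITEMS = (
    "Distressed", "Upset", "Guilty", "Scared", "Hostile",
    "Irritable", "Ashamed", "Nervous", "Jittery", "Afraid",
)


def score_panas(ratings: dict[str, int]) -> tuple[int, int]:
    """Return (Positive Affect, Negative Affect) for one PANAS administration.

    Every item must be rated 1-5, so each 10-item subscale sum lies in 10-50.
    """
    for item in POSITIVE_ITEMS + NEGATIVE_ITEMS:
        value = ratings[item]  # raises KeyError if an item was left blank
        if not 1 <= value <= 5:
            raise ValueError(f"{item} rating {value} is outside the 1-5 scale")
    positive = sum(ratings[item] for item in POSITIVE_ITEMS)
    negative = sum(ratings[item] for item in NEGATIVE_ITEMS)
    return positive, negative


def phase_means(samples: list[tuple[str, float]]) -> dict[str, float]:
    """Average heart-rate samples (bpm) within each phase label.

    `samples` is a hypothetical list of (phase, bpm) pairs, e.g. ("during", 72.0).
    """
    by_phase: dict[str, list[float]] = {}
    for phase, bpm in samples:
        by_phase.setdefault(phase, []).append(bpm)
    return {phase: mean(values) for phase, values in by_phase.items()}
```

For example, a participant who rates every item 1 scores (10, 10), the floor of both subscales. The per-period averages are what a repeated measures ANOVA would then take as its three within-subject levels of time.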

Outcome

There was a significant difference in average participant heart rate across the periods before, during, and after the VR color exposure (p = .004), such that heart rate decreased (Table 1; Figure 1, error bars show standard errors of the mean). On average, participants in the cool color condition started with a higher heart rate, but their heart rates overall were not significantly higher than those in the warm condition (Table 1). However, the interaction between time and color condition was significant, such that the decrease in heart rate was steeper for those in the cool condition (p = .002, Table 1). Post-hoc pairwise comparisons with a Bonferroni adjustment suggested this interaction was driven by differences between Before and After, t(18) = 5.22, p < .001, and between During and After within the Cool condition, t(18) = 3.48, p = .04. There were no significant differences in Positive Affect (Table 1). However, self-reported Negative Affect decreased significantly for both the Warm and Cool groups after the VR color exposure (p < .001; Table 1 and Figure 2).
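For readers unfamiliar with the Bonferroni adjustment mentioned above: with m comparisons, each raw p-value is multiplied by m and capped at 1, which is equivalent to testing each raw p-value against α/m. The sketch below is a generic illustration; it makes no assumption about how many comparisons the authors actually ran, and the function name `bonferroni` is hypothetical.

```python
def bonferroni(p_values: list[float]) -> list[float]:
    """Bonferroni-adjust raw p-values: multiply each by the number of
    comparisons m, capping the result at 1.0."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def significant(p_values: list[float], alpha: float = 0.05) -> list[bool]:
    """Flag comparisons whose adjusted p-value falls below alpha
    (equivalently, raw p < alpha / m)."""
    return [p < alpha for p in bonferroni(p_values)]
```

With three comparisons, for instance, raw p-values of 0.125, 0.25, and 0.5 become 0.375, 0.75, and 1.0. The correction is conservative: it controls the family-wise error rate at the cost of some power.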

Conclusion

Given the importance of color in design and the increasing attention to VR, we examined the effects of warm versus cool colors in VR on heart rate and affect. Our findings partially support our hypotheses: specifically, exposure to cool colors in VR may decrease heart rate and Negative Affect. However, we found no effects for either color group on Positive Affect, and the warm color exposure also decreased self-reported Negative Affect. These results may be because the VR color exposure, which involved sitting quietly for 10 min while watching colors, could have calmed the participants in general (e.g., lowered distress, irritability, nervousness). In future research, we could test different tasks during the VR color exposure. We also recommend drawing on emotion theories such as Core Affect and the Theory of Constructed Emotion to compare deactivating and activating emotions (e.g., Feldman Barrett & Russell, 1998; Russell, 2003) by using the PANAS-X (Watson & Clark, 1994). Future research could also collect a larger sample to be more robust against potential confounds of individual differences in initial heart rates across conditions (Figure 1).

These findings can be used to advance research on how to create better designs in VR and in other fields. Knowing the effects of color groups can allow designers to build more meaningful products geared toward their users. Because this study was conducted in VR, its findings can help those who build simulations understand the effects of color and design impactful scenes (e.g., a blue and green zen garden for a calming effect). These findings can also be applied to other domains such as Art Therapy (Withrow, 2004), where knowing how colors may be perceived can help therapists better understand why their clients may choose specific color palettes. Lastly, a better understanding of the effects of color could also lead to more ethical designs (e.g., avoiding colors that induce arousal or stress), as understanding how some colors affect users allows designers to limit or choose these colors in their products, creating better user experiences and outcomes.

Acknowledgments

We are grateful for helpful comments on an earlier draft from Letitia Robinson and three anonymous reviewers.

Declaration of Conflicting Interests

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by the Research Improvement Grant program to the first author from the University of Maryland College of Information.

ORCID iDs

Ciara A. Fabian https://orcid.org/0009-0008-0621-5180

Susannah B. F. Paletz https://orcid.org/0000-0002-9513-2799

References

AL-Ayash A., Kane R. T., Smith D., Green-Armytage P. (2016). The influence of color on student emotion, heart rate, and performance in learning environments. Color Research and Application, 41(2), 196–205. https://doi.org/10.1002/col.21949

https://journals.sagepub.com/doi/10.1177/10711813251367738

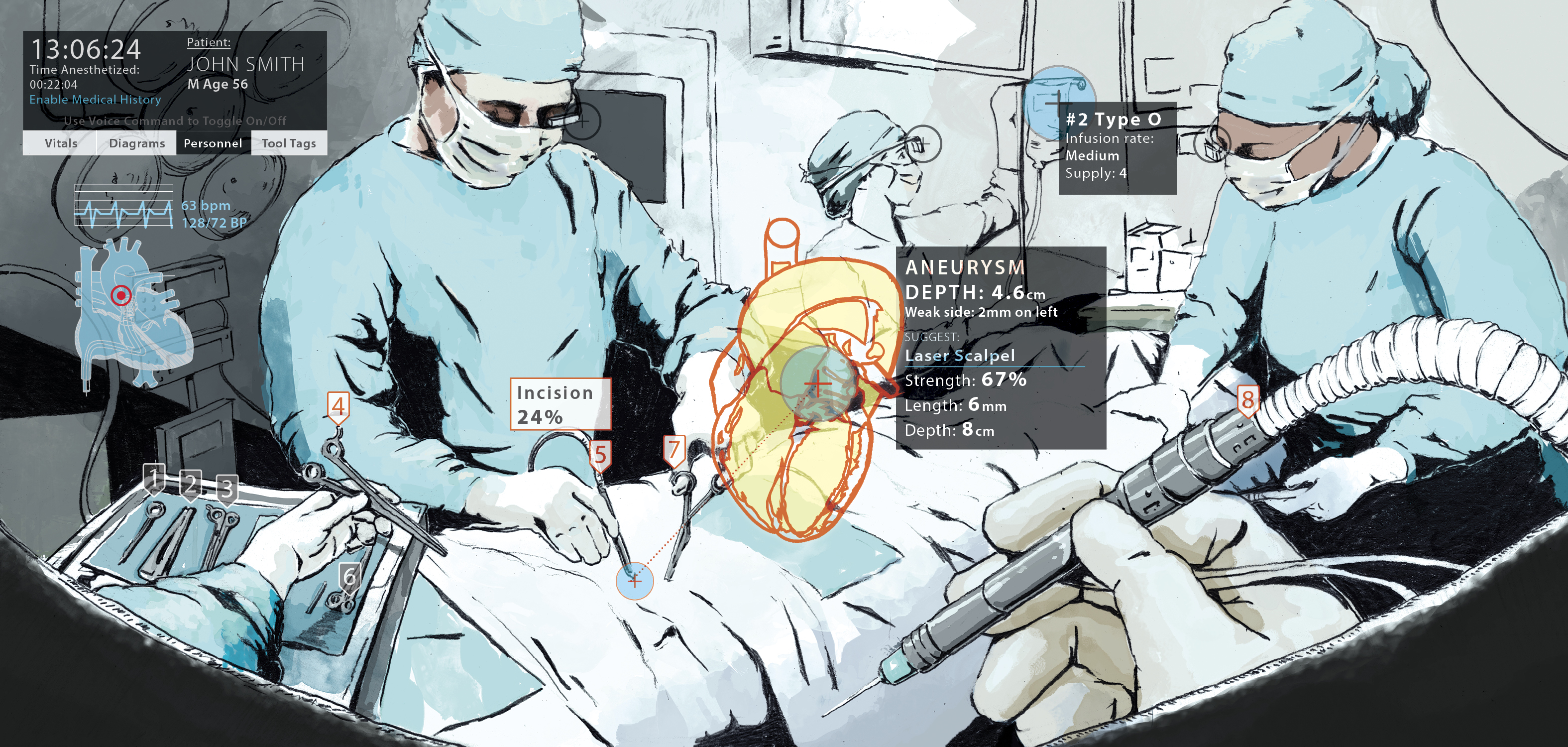

Medical Training—From Every Angle

Cutting-Edge VR Camera System Focuses on New Educational Possibilities

April 10, 2025

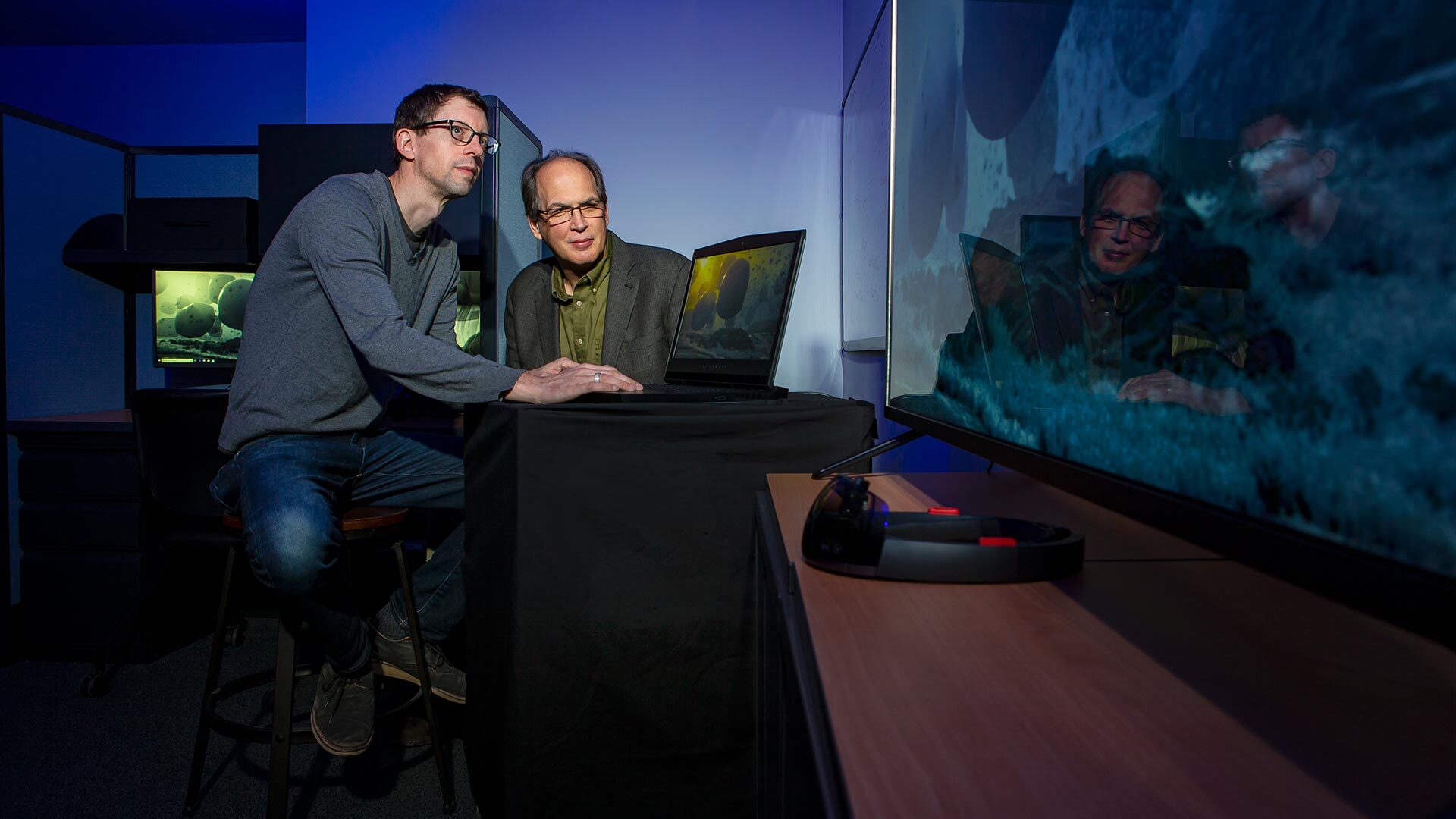

An advanced camera system developed at the University of Maryland, College Park is enabling futuristic teaching and learning methods for physician assistant students at the University of Maryland, Baltimore.

Called the HoloCamera, the system uses 300 cameras to create virtual reality (VR) training scenarios depicting treatment, which students can “step into” to view a procedure from all angles. Funded by the National Science Foundation and led by computer science Professor Amitabh Varshney, dean of the College of Computer, Mathematical, and Natural Sciences, the project was forged through the University of Maryland Strategic Partnership: MPowering the State, a collaboration between UMCP and UMB to leverage the strengths of each institution.

Join Varshney, UMB Physician Assistant Program Director and Associate Professor Cheri Hendrix, and their students as they take the next step in medical training in the latest installment of “Enterprise: University of Maryland Research Stories.”

https://today.umd.edu/medical-training-from-every-angle

A New Generation of Doctoral Scholars Goes Beyond the Page

By Jessica Weiss ’05

April 07, 2025

From video games to VR, ARHU doctoral students are expanding dissertation scholarship through digital and experimental work.

When American studies doctoral student Christin Washington set out to explore themes of memory and spirituality, she knew a traditional, text-based dissertation wouldn’t suffice. To fully capture the lived experiences, spaces and rituals of Black women’s African-derived spiritual practices—like Obeah, Voodoo, Christianity and Rootwork—she needed to create something immersive.

Of the four chapters of her dissertation, which she will defend next year, one will be a 3D digital recreation of her grandmother’s house in Guyana, designed to be experienced in virtual or augmented reality. With additional sensory elements like sound and smell, she aims to transport her committee into the experience of Nine-Night—a Caribbean funerary tradition in which loved ones gather for nine nights to honor the deceased.

A Legacy of Innovation at UMD

While the traditional, chapter-based dissertation remains a cornerstone of humanities scholarship, a growing number of doctoral students in the College of Arts and Humanities (ARHU) are exploring new formats—video games, immersive websites, handmade objects, public humanities projects—to expand how research is created and shared. Advances in digital technology, along with increasing faculty support and a range of university resources—including specialized programs, funding, and dedicated spaces for creative and technical experimentation—are making it possible for students to integrate experimental approaches alongside traditional academic work.

“It’s sometimes hard for students to see this as a possibility since they’re still so surrounded by traditional academic culture,” said Matthew Kirschenbaum, Distinguished University Professor of English. “They don’t realize a dissertation can be something else entirely, and that this actually has a somewhat longer history than one might think.”

Kirschenbaum was an early pioneer. His 1999 dissertation at the University of Virginia, “Lines for a Virtual T[y/o]pography,” was one of the first-ever electronic humanities dissertations. After joining UMD in 2001, he helped shape MITH—the Maryland Institute for Technology in the Humanities—into a hub for experimental digital humanities (DH) scholarship. MITH, located in Hornbake Library, provides students with the technical and theoretical support needed to explore digital methods, from text analysis to data visualization.

A decade later, Kirschenbaum would begin to mentor another pioneering digital scholar at UMD: Amanda Visconti Ph.D. ’15. Their dissertation project, “Infinite Ulysses,” was a participatory digital edition of James Joyce’s famously difficult novel “Ulysses” in which users could highlight, annotate and interact with the text, creating a collaborative reading experience. Visconti used coding, user testing and web design as core research methods of the dissertation, rather than as mere supplements to a written document. The dissertation won the Graduate School’s 2016 Distinguished Dissertation Award.

“Dr. Visconti’s work challenged the boundaries of what a humanities dissertation could be,” Kirschenbaum said. “All of the challenges involved were intellectually exciting; Dr. Visconti really ended up teaching the committee a lot along the way.”

Now director of UVA Libraries’ Scholars’ Lab, Visconti has since advised dozens of students pursuing digital dissertation work. Visconti emphasizes the importance of being surrounded by faculty and peers, as well as courses and spaces like MITH, to support and validate non-traditional scholarship.

“Being at MITH and working with DH scholars meant I got to see what good scholarship in the field could look like,” Visconti said. “It's enormously helpful to have mentors and a community who accept your work as valid scholarship.”

Pushing the Boundaries of Scholarship

In working to recreate her grandmother’s house, Washington is collaborating with faculty across multiple disciplines—including American studies, English, women’s studies, immersive media design (IMD), geographical information sciences and historic preservation. Last summer, with funding from a 2024 Caribbean Digital Scholarship Collective grant, she traveled to Guyana with Assistant Clinical Professor Stefan Woehlke from the Historic Preservation Program to collect data and images of her grandmother’s house. Now based at AADHum and NarraSpaceXR, two campus makerspaces for digital experimentation and storytelling, she is using tools like virtual reality headsets, holographic displays and high-performance computing to create a sensory, immersive experience of the house—one that will be presented to her committee alongside traditional written chapters.

“So much of this work is about finding the right form—and sometimes, that means creating it from scratch,” she said. “My committee has been incredibly open to helping me develop a format that fully articulates the lived experience of Black women.”

Other students are also pushing the boundaries of dissertation formats. Lisa Abena Osei, a doctoral student in English, is designing an interactive game that immerses players in Afrofuturist and Africanfuturist worlds, inviting them to engage with alternative histories, technologies and narratives. (Afrofuturism broadly imagines Black futures through a diasporic lens, while Africanfuturism remains rooted in African cultures and histories.) Himadri Agarwal, another doctoral student in English, is also making a game as part of her project, using it to examine digital gaming and reparative game design practices. She will present a physical installation that people can interact with.

Not all experimental dissertations are digital. Nat McGartland, a doctoral student in English, is integrating textile art into her dissertation on visual media and data representation, crocheting data visualizations to accompany each chapter. The crocheted pieces serve to represent McGartland’s argument that human decision-making always infiltrates the collection, processing and presentation of data. Meanwhile, American studies doctoral candidate Kristy Li Puma is producing public events and digital humanities projects alongside the community members she interviews for her monograph dissertation on the history, politics and cultural practices of D.C.’s alternative and underground communities.

Preparing for the Future

Marisa Parham, professor of English, associate director of MITH and director of AADHum and NarraSpaceXR, said that beyond redefining academic research and creative scholarship, these projects are shaping the future of academic hiring, publishing and public engagement. For many students, the dissertation isn’t just a requirement; it’s a testing ground for the kind of work they may do after the Ph.D., whether in academia or elsewhere. And by working in non-traditional formats, students are developing a range of skills that extend past traditional research and writing.

When working on digital projects, students are “working to collect hardware, navigating software licenses, even hiring people,” Parham said. “This means that working on the dissertation requires acquiring or honing management, planning and/or design skills that are clearly transferable, while also becoming a skilled researcher and thinker.”

Digital and experimental dissertations also have the potential to reach broader audiences. Unlike traditional monographs, which are typically read by a small group of scholars, these projects invite public engagement. That means students must learn how to communicate their work both to specialized academic communities and to non-experts—an essential skill for careers in and beyond academia.

For Washington, that means rethinking narratives about how Black women’s lives are understood and represented. She hopes to open up the digital elements of her dissertation to the public for viewing—allowing others to step inside it.

“My ultimate goal is for people to continue to understand the intimate and interior lives of Black women,” she said. “I’m hoping to contribute a piece of artwork and scholarship that does some of that work.”

Photos by Lisa Helfert.

https://arhu.umd.edu/news/new-generation-doctoral-scholars-goes-beyond-page

A New Reality for Physician Assistant Students

January 31, 2025 | By Alex Likowski

“Today is gonna be a really, really fun day for you,” Assistant Dean for Physician Assistant Education and Associate Professor Cheri Hendrix, DHEd, MSBME, PA-C, DFAAPA, told her class of 58 physician assistant (PA) students. In truth, it would be hard to say who would have more fun: the students, who sat in anticipation of an exciting new high-tech learning experience, or Hendrix, who had waited a very long time for this day to arrive.

“I think this is really going to enrich what you know about neurologic disease,” she continued, understating her expectations a bit. For the first time, this learning module made use of virtual reality (VR) technology, offering her entire didactic year cohort an immersive experience. With the use of VR headsets, the students would be virtually in the room with Hendrix as she examined a standardized patient — a professional actor posing as a patient who was presenting with signs of distress. The plan was to give the students a brief knowledge test before their VR experience, then another test afterward to see how much this experience improved their understanding.

Student Lauren Makarehchi was among the first in the group to experience the virtual exam.

“I feel like I’m actually there, even though I can’t see my hands or anything. But it’s like, I feel like I’m very immersed in the experience,” she said with her headset still on.

Not only could Makarehchi see the exam, but she also could move around the room and observe provider and patient from every angle. Additionally, as the patient described her experiences for Hendrix – all classic symptoms of a stroke – an animated graphical overlay allowed Makarehchi and the other students to see what was going on inside the patient’s body, including the nervous and cardiovascular systems.

“I can see that the clot started in her atrium, went up to the left side of her brain, and now you can see the impulses are being limited on the right side of her body, which is causing the motor and sensory limitations that we can see on the exam,” said student Meg Rice. After her five-minute virtual experience, Rice seemed impressed.

“It’s a total game-changer to kind of be able to be in the room with them, seeing what’s going on in real time, basically as she does the exam and asks the different questions. And you know how what’s going on inside of her body is influencing her answers and her symptoms and her signs all in real time. So, that’s really cool,” she said. “When I get to clinicals next year, if I have a patient with a similar situation, I can kind of have this picture in my brain of what might be going on.”

Every student appeared to have a similar impression, and most were quick to see the impact VR training might have on their ability to retain what they learn.

“I think this kind of experience will change the way you look at patients for sure, because now I’m able to remember seeing inside and what’s happening,” said student Simone Hill. “It’s one thing to see someone presenting physically with their complaints, but when you have experiences like this, you’re able to remember: OK, I remember seeing what this looks like from the inside. I remember seeing which part of the brain was impacted by this and where the blockages were and where the vessels were flowing through.”

Classmate Eugene Obeng-Appiah agreed. “If I see a patient who presents with certain symptoms, I can go back to that memory that I have of what I saw. And so I think that would allow me to be able to put pieces together a little bit more and faster as well.”

The ability to retain and recall a huge and wide array of information is precisely what Hendrix and her colleagues are trying to gauge.

“We have 24 months to get 3½ years of medical school in them,” Hendrix said. “How do you do that so that the understanding is there, the knowledge is there? And the application of that, you’ve got to apply these medical concepts. You can’t memorize this.”

In those 24 months, PA students must master topics such as anatomy, clinical practice, diagnostic tests, and disease across many disciplines, including family medicine, internal medicine, pediatric medicine, women’s health, psychiatric medicine, behavioral health, emergency medicine, and surgery.

To fill that very tall order, Hendrix can rely on her skilled team at the University of Maryland School of Graduate Studies (UMSGS). But to step up the impact of their training, to help students get to what she calls “a-ha!” moments faster, Hendrix wanted students in the classroom to get as close as possible to the real thing with virtual reality. For that, she called in another team, this one from the University of Maryland, College Park’s (UMCP) College of Computer, Mathematical, and Natural Sciences. Dean Amitabh Varshney, PhD, is a pioneer in the application of high-performance computing and visualization in engineering, science, and medicine.

“Oh, that’s a marriage made in heaven. Boy, Dr. Amitabh Varshney, best buddy. I love him!” Hendrix gushed. Shortly before Hendrix’s first chance encounter with her College Park comrade, Varshney had completed building the largest volumetric capture studio in the world, funded by a $1 million grant from the National Science Foundation. Called HoloCamera, the studio employs AI-driven techniques to fuse and reconstruct dynamic visual data captured simultaneously from 300 cameras. Varshney’s team integrated anatomically accurate animations, precisely aligned with the dynamic hologram models, to enhance the student learning experience.

“I’ve got some ideas I want to use,” Hendrix said, recalling their conversation. “I want to see inside a patient. I want to see inside a mannequin. I want to use holographic technology to demonstrate to the students the pathophysiologic mechanism of diseases. They said, ‘Great.’ ”

Varshney’s team now works with Hendrix’s PA program under the aegis of the University of Maryland Institute for Health Computing, a collaboration supported by the University of Maryland Strategic Partnership: MPowering the State (MPower). MPower is a collaboration between the state of Maryland’s two most powerful public research engines — the University of Maryland, Baltimore (UMB) and UMCP — to strengthen and serve the state of Maryland and its citizens.

So far, only the stroke patient scenario has been produced for use in the classroom, but Hendrix is optimistic about producing more videos and introducing more technology, such as the ability to not just see and hear, but also to touch and feel.

Enter Dixie Pennington, MS, CHSE, CHSOS, simulation director for the UMSGS PA program. Her job is to think ahead of the technology while also finding ways to work with the cutting edge of what’s available right now, such as haptic gloves.

“Haptic is related to a sensation where you can touch and feel and you can actually get feedback that you are touching something,” she said. “If you’re immersed into some sort of ARV [artificial reality video] or setting, you don’t know that you’re touching an avatar or something like that. But if you wore haptic gloves, you would know. You’d have that sensation, that feedback that would come back to your hand, which makes it that much more real.”

The students are thinking ahead, too. Inspired by a dermatology PA who treated her when she was 13, student Emily Lamb has her sights set on following the same path, but maybe with a boost from technology. “If there was a way to use virtual reality so where you can see different skin lesions, see the depth on different skin tones and different ages, even pediatric to geriatric, the skin is so different, it changes. It’s actually the largest organ in our body,” she said. “So, to be able to study it in a more in-depth and realistic way, it would completely change the field.”

Bottom line, Hendrix said the day exceeded her expectations. “I knew they would like it, but I didn’t know how much they would absolutely love it. And many of the students came out saying, ‘Can I just learn every condition this way?’”

https://www.umaryland.edu/news/archived-news/january-2025/a-new-reality-for-physician-assistant-students.php

Tech Talk: Virtual Reality healthcare training at University of Maryland

by: Tosin Fakile

Posted: May 7, 2025 / 04:08 PM EDT

Updated: May 7, 2025 / 04:11 PM EDT

COLLEGE PARK, Md. (DC News Now) — The University of Maryland, College Park is using a 3D virtual human avatar in a new virtual reality medical training scenario in which learners diagnose a patient.

“About five years ago, we had this vision to create cameras that could create 3D, virtual, avatars of humans that were cinematically real, very high fidelity,” Professor Amitabh Varshney said.

That resulted in the creation of the Holocamera Cinematic Avatar Imaging Studio.

“Instead of a camera person, for instance, in a movie, the director directs how the user will experience a scene. We are now able to allow users to directly figure out how they would like to immerse and see, experience a particular environment,” Varshney said.

That full immersion happens at the volumetric capture facility at UMD’s College of Computer, Mathematical and Natural Sciences.

“It has 300 cameras. Each camera is capturing information at 4K resolution, 300 frames a second. So overall, we are getting 100 billion samples per second in this facility,” Varshney said.

It’s designed for high-precision learning and simulation.

Varshney said that a few years ago, his team carried out a study showing that users recall information much better when they experience it in an embodied fashion in a VR environment than when they experience it on a desktop.

“If you look at the practice of surgery as an example, you have situations where no more than a small handful of residents, or interns, or healthcare professionals, students can participate and see the surgery,” Varshney said. “We would be able to allow students to experience and stand in the shoes of the surgeon, add their own. Well, further, it is scalable.”

It took a few years and a few versions to get to this point, and there’s more still planned for the Holocamera.

“One of the other things that we are using this facility for is to understand how artificial intelligence can be used to generate. So, we will capture one set of actions in this facility, and can we then use generative AI to generate other actions and other modalities from this facility? So that we can try a lot of what-if scenarios, in an interactive manner,” Varshney said. “How can we use this as a tool to train the next generation of computer scientists, artificial intelligence scientists and engineers, and technologists in the underlying technology for this?”

https://www.dcnewsnow.com/tech-talk/tech-talk-virtual-reality-healthcare-training-at-university-of-maryland/

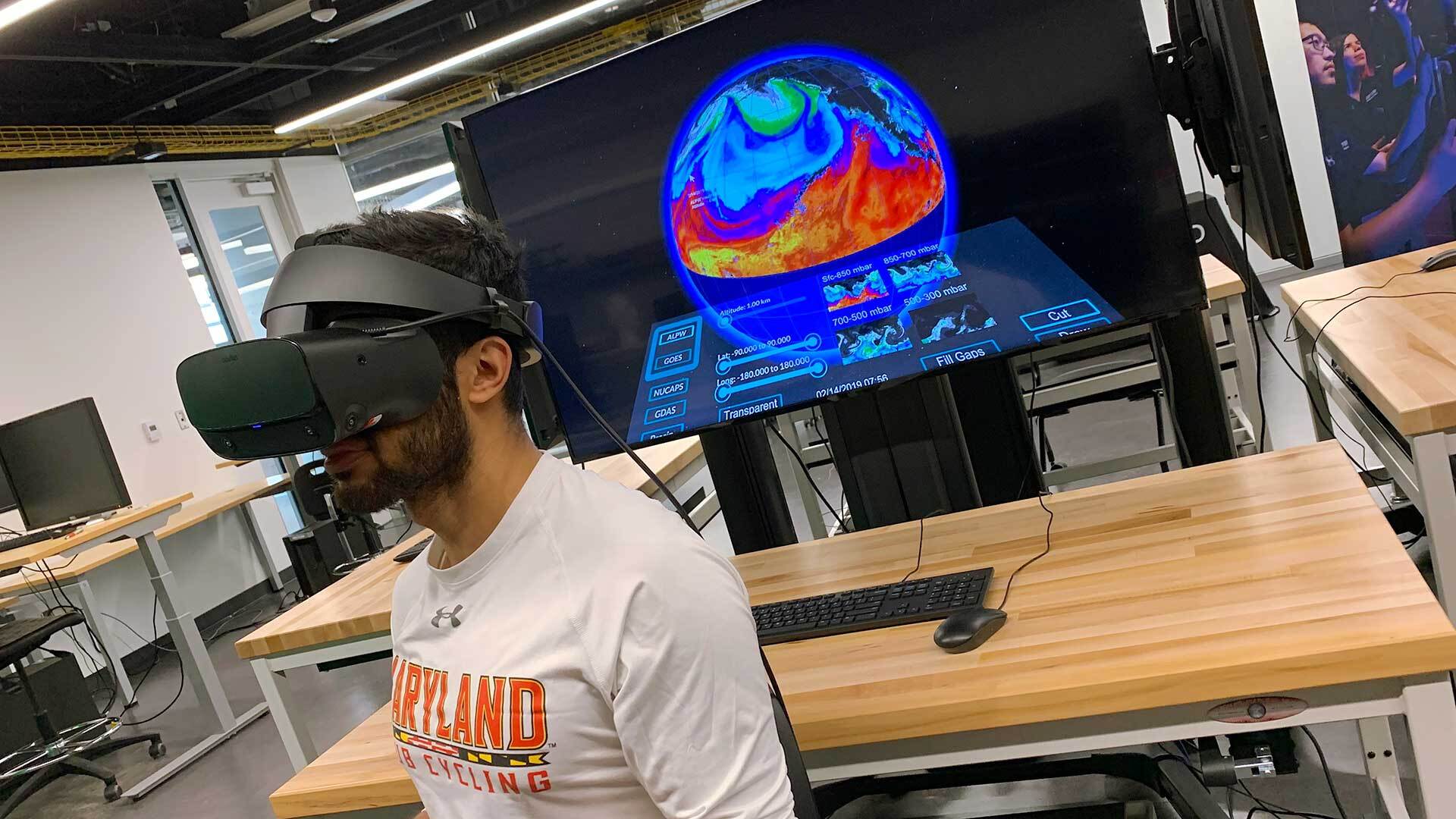

A ‘Wave’ of New Understanding About Earth’s Oceans and Atmosphere

Faculty, Students Collaborate With NASA on Interactive Installation at Kennedy Center

Apr 01, 2025

On one towering screen, monochrome clouds whoosh by as if the viewer is watching them from above. On the next screen, a range of blues and greens on a section of ocean represents the levels of carbon and chlorophyll present. On a third, a rainbow effect on the outer edges of an image of Earth shows how far various wavelengths can penetrate the planet’s surface.

These screens and the scenes they show make up “Wave: From Space to Ocean,” a project led by a team of University of Maryland faculty members and students working with NASA scientists and counterparts from the University of North Texas. Together, they’re turning data from a satellite into an interactive, digestible visual experience for the public. The project is on view at the Kennedy Center through April 13 as part of the “Earth to Space: Arts Breaking the Sky” festival.

The PACE (Plankton, Aerosol, Cloud, Ocean Ecosystem) satellite, which launched on Feb. 8, 2024, is collecting critical information about ocean health and air quality by measuring the distribution of phytoplankton—microscopic plants and algae that provide food for lots of aquatic creatures while producing much of the planet’s oxygen—and by monitoring atmospheric variables associated with air quality.

“The satellite is able to look at our globe in ways that have never been done before,” said Ian McDermott ’12, the immersive media technician for UMD’s Immersive Media Design (IMD) program and one of the leaders of the Wave project. “It’s able to take photos and absorb data that uses colors that are beyond our eyes’ capacity to see. We’re making that data engaging to the general public.”

IMD is affiliated with UMD’s Arts for All initiative, which identifies and supports creative ways to combine the university’s strengths in the arts, sciences and technology to advance social justice, build community and develop collaborative, people-centered solutions to grand challenges. Arts for All also helped fund the initial project through a Spring 2024 ArtsAMP Grant.

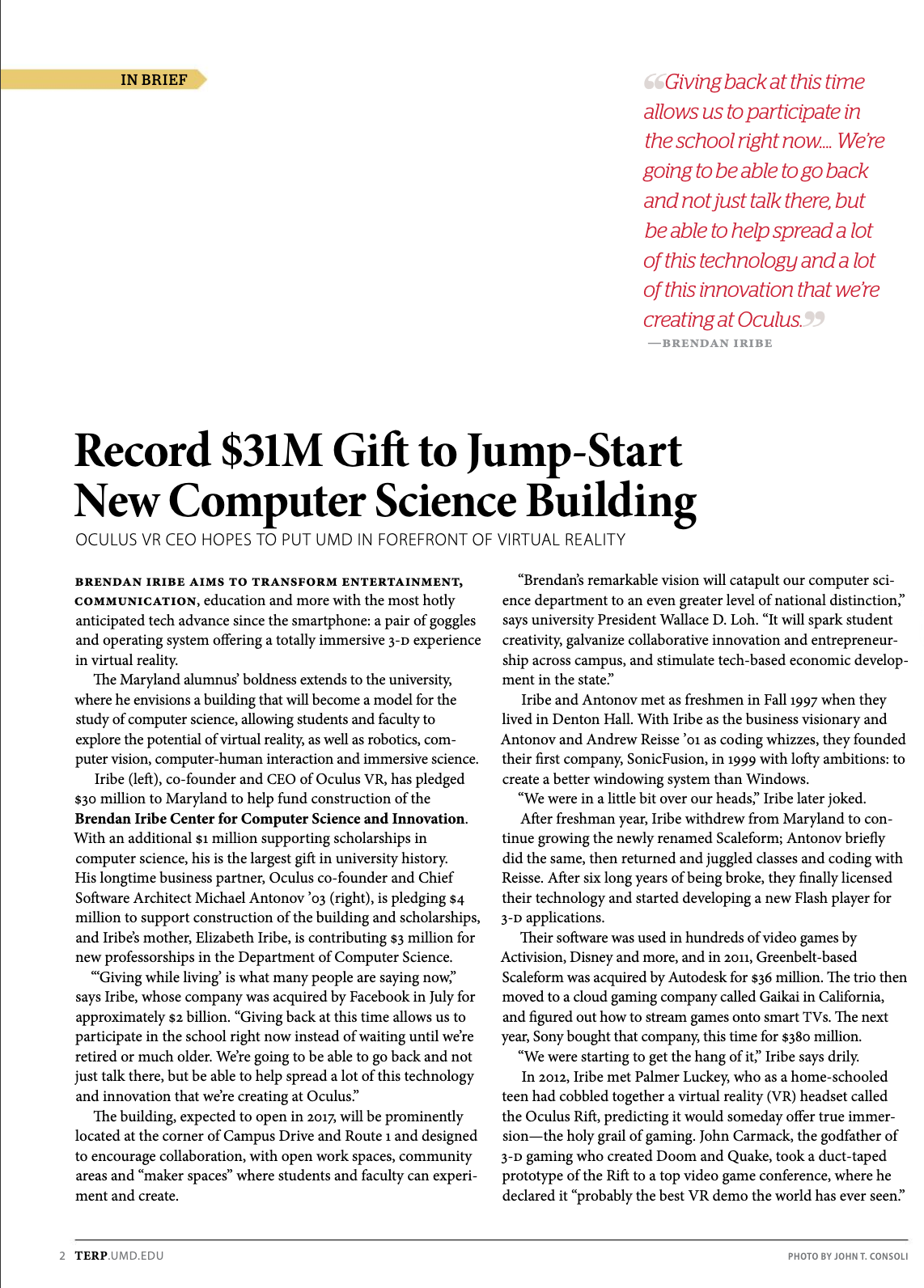

“Wave” is a roughly 15-minute animation projected on screens 16 feet tall. Motion capture cameras track guests’ bodies and movements; by waving their hands or making other motions, visitors can reveal data about phytoplankton levels, turn the globe to see a different angle of the atmosphere’s wavelengths, or watch what a phytoplankton bloom (a rapid increase in the population of the photosynthetic plankton) looks like. They can also track images of clouds captured by HARP2, an instrument that uses 60 cameras at once to take images; the results show the clouds’ sizes and classifications, revealing information such as where a lack of rain clouds might signal drought.

The project allows guests to “become proactive researchers and discover new information, new answers and new questions,” said Myungin Lee, a lecturer in the Department of Computer Science and a leader on “Wave” with Mollye Bendell, assistant professor of art. (Sam Crawford, sound and media technologist in the School of Theatre, Dance, and Performance Studies and co-director of the Maya Brin Institute for New Performance, also worked on “Wave.”)

The installation is an example of how UMD’s faculty members are developing innovative new ways of teaching and learning. “This artwork involves programming, data processing and visualization, user interface design, 3D modeling and scientific simulation,” said Lee. “The students are getting unique opportunities to be involved in such a large-scale project.”

Andrei Davydov ’25, a student in the IMD program, worked on 3D modeling for the project, sharpening his skills in coding. “It was a fun animation challenge to have the planktons both work as a group of 200, while at the same time making them have their own individual personalities,” he said.

The Wave team members hope that those who interact with the installation will walk away with a deepened appreciation for the intricate ecosystems that inhabit our planet’s oceans and atmosphere. “I want them to be mesmerized and taken by awe by the big scale of the exhibition, and also connect with how our oceans are doing,” said Yooeun Lee ’26, an IMD student. “I hope people will appreciate nature and continue to bring more recognition to it.”

https://today.umd.edu/a-wave-of-new-understanding-about-earths-oceans-and-atmosphere

Creating Wearable Devices for Body-to-Body Communication

March 6, 2025

Jun Nishida’s Embodied Dynamics Laboratory explores the dynamics of our physical skills and interactions.

In his Embodied Dynamics Laboratory, University of Maryland Computer Science and Immersive Media Design Assistant Professor Jun Nishida creates wearable devices that allow our bodies to communicate and measure our skills and embodied knowledge.

From interactive exoskeletons that share finger dexterity skills from one person to another, to a virtual reality system that allows adults to experience the world from a 5-year-old’s perspective, Nishida’s devices aim to better understand the dynamics of our physical experiences, perceptions and interactions. The goal of Nishida’s research: to establish body communication and explore how computer technology can improve our overall wellbeing.


https://cmns.umd.edu/news-events/news/jun-nishida-embodied-dynamics-laboratory-wearable-technology

Companies Face Double-Edged Reality of Immersive Tech in Foreign Markets

Business Researcher Offers Tips on Tailoring AR, VR Use Around the World

Global businesses are embracing augmented reality (AR) and virtual reality (VR) to give potential customers a feel for their products before buying, whether virtually trying on a lipstick shade, exploring a brand’s world in Roblox or seeing how a new couch might look in your living room.

But getting it right in the virtual world doesn’t mean the same thing in every global market, a University of Maryland marketing professor found in new research.

It’s well established that multinational companies often struggle to compete against local businesses in overseas markets—a concept known as Liability of Foreignness (LOF)—and it turns out that the same disadvantages can apply to immersive technologies intended to break down traditional barriers, according to a University of Maryland-led study published last month in the Journal of International Business Studies.

Culture influences how people interact with so-called extended reality (XR) technologies, which makes it tough for multinational companies to have an XR approach that works well in every country, said lead researcher P.K. Kannan, Dean's Chair in Marketing Science at the Robert H. Smith School of Business.

“If you’re a firm coming into a local market, you have the liability of being a foreigner because you really don’t understand the cultural nuances and you might not be able to clearly communicate your value proposition in the way that the local culture will understand,” he said. “Basically what we are saying is don’t just take XR that has been developed for one market and just immediately assume it will work the same way in another market.”

Kannan and co-authors Hyoryung Nam Ph.D. ’12, now at Syracuse University, and Yiling Li and Jeonghye Choi at Yonsei University in Seoul, Korea, investigated XR marketing strategy in tech-savvy South Korea. “They are at the cutting edge of these applications in retail,” Kannan said. “They have many locally based companies, as well as foreign companies coming in to market products.”

They teamed up with a market research company to study 257 beauty brands in South Korea over a three-year period from 2019-22, particularly how XR innovations affected brand engagement with foreign brands versus local brands.

They focused on “brand buzz”—how often a brand gets mentioned on social media, a key indicator of brand engagement. The team confirmed that LOF does exist in the virtual world for foreign firms, due to cultural mismatches in how people process information.

“If your XR is not really resonating with consumers, it won’t generate much brand engagement,” Kannan said.

The problems were especially apparent in cases where the XR applications created highly interactive, extremely vivid but less realistic experiences. For example, there’s a greater risk of cultural mismatch when consumers interact with products in a fully imaginary, stylized virtual world than when they use a straightforward application that uses their own image to try on makeup shades or fashion accessories.

But a very new brand or one introducing a new product is less likely to face LOF, Kannan said. “The uncertainty about something new takes over, making people more focused on experiencing the new brand or product rather than noticing cultural mismatches.”

The researchers also found that companies can avoid cultural mismatch problems through strategic marketing investments in local markets. Brands that have their own platforms that allow direct connections with local customers are far less likely to experience LOF.

Kannan offered the following recommendations for companies seeking to use XR to connect with customers in foreign markets:

1. Know the culture: “Understand the cultural norms and nuances of a new market before you enter it. Tailor your XR strategy to fit the local culture, ensuring it feels relevant and engaging for local consumers.”

2. Choose XR technology wisely: “Some XR technologies can be more challenging for foreign businesses. Highly interactive and imaginative XR, which often use more advanced technology, may increase the risk of cultural mismatches. If you’re not very familiar with the local market, start with simpler XR features and gradually introduce more advanced ones.”

3. Leverage newness: “For multinational companies, when you’re a new brand or introducing a new product, that comes with a unique advantage—people are more focused on experiencing something new. This gives you some room to introduce more advanced XR in foreign markets.”

4. Build a community: “Ensure that you get onto your own platform and start building brand communities around your product. That will help you in the long run to reduce the impact of the liability of foreignness.”

In general, having XR is better than not having it, said Kannan. But adopting it comes with risks. If you come up with the wrong technology and the wrong approach, it can harm your brand — sometimes even worse than not having XR at all.

“We find that companies that use XR generally perform better than companies that don’t use these technologies. But if you use it in the wrong way, it can harm your brand,” he said.

https://today.umd.edu/companies-face-double-edged-reality-of-immersive-tech-in-foreign-markets

New UMD Research Brings Smarter, More Subtle Assistance to AR Glasses

UMD Ph.D. student Geonsun Lee and Google researcher Ruofei Du developed Sensible Agent, a framework that allows AR glasses to anticipate user needs using gaze and gesture cues.

October 7, 2025

Recent innovations, such as Google's Project Astra, exemplify the potential of proactive agents embedded in augmented reality (AR) glasses to offer intelligent assistance that anticipates user needs and seamlessly integrates into everyday life. These agents promise remarkable convenience, from effortlessly navigating unfamiliar transit hubs to discreetly offering timely suggestions in crowded spaces. Yet, today’s agents remain constrained by a significant limitation: they predominantly rely on explicit verbal commands from users. This requirement can be awkward or disruptive in social environments, cognitively taxing in time-sensitive scenarios, or simply impractical.

To address these challenges, the team introduced Sensible Agent, a framework designed for unobtrusive interaction with proactive AR agents, published at UIST 2025. Led by Ruofei Du, Interactive Perception & Graphics Lead at Google, and Geonsun Lee, a University of Maryland Department of Computer Science Ph.D. student and Google XR Student Researcher advised by Distinguished University Professor Dinesh Manocha, the project represents a collaboration bridging cutting-edge academic research and industry innovation.

Sensible Agent builds on the team’s prior research in Human I/O and fundamentally reshapes interaction by anticipating user intentions and determining the best approach to deliver assistance. It leverages real-time multimodal context sensing, subtle gestures, gaze input, and minimal visual cues to offer unobtrusive, contextually appropriate assistance—marking a crucial step toward truly integrated, socially aware AR systems that respect user context, minimize cognitive disruption, and make proactive digital assistance practical for daily life.

https://www.cs.umd.edu/article/2025/10/new-umd-research-brings-smarter-more-subtle-assistance-ar-glasses

UMD's Immersive Media Design Program Bridges Art and Technology

A Q&A with Roger Eastman on the program's vision, opportunities and impact.

February 05, 2025

The rapid integration of technology into everyday life has brought new possibilities for creative expression and innovation. One area seeing significant advancement is immersive media, which engages viewers with sight, sound and other sensory experiences, encouraging interaction with digital environments. The University of Maryland’s Department of Computer Science offers a program dedicated to this field: Immersive Media Design (IMD).

Founded to meet the growing demand for interdisciplinary expertise, the IMD program provides undergraduates with the tools to explore emerging technologies like virtual, augmented and extended reality. Students can pursue either a Bachelor of Arts in Emerging Creatives or a Bachelor of Science in Computing. Both tracks are designed to foster collaboration across disciplines and prepare students for careers at the intersection of art and technology. With more than 130 students, 20 staff and faculty members and newly hired Assistant Director Georgia Creson, the program is poised to expand its reach.

In a Q&A, Professor of Computer Science Roger Eastman, director of the IMD program, shares insights on its evolution, goals and impact.

Q: What inspired the creation of the Immersive Media Design Program at UMD?

A: The Immersive Media Design program was initiated by a task force created by the Provost in 2016. The task force explored opportunities for new degrees centered around emerging technologies like virtual, augmented and extended reality. Their recommendation led to creating a program designed to blend technical and artistic disciplines into immersive media education.

Q: How does the program combine the fields of computer science and the arts to offer a unique educational experience?

A: The program leverages the strengths of the nationally ranked Department of Computer Science and the Department of Art. Students choose between the Bachelor of Arts, which emphasizes art courses, and the Bachelor of Science, which emphasizes computer science courses. Regardless of their choice, all students take core courses in immersive media. These courses teach them to program new media, integrate it into exhibitions, games or installations, and creatively apply emerging technologies.

Q: What skills and competencies do students develop through the program?

A: We categorize the skills into three main areas. First is creating digital and physical assets, such as 3D models, video, audio files and installations. Second is the use of media engines like Unity, Isadora or TouchDesigner to combine assets interactively. Third is design, which involves conceptualizing, prototyping and refining immersive experiences. We aim to equip students to design, populate and execute their projects while fostering collaboration and adaptability.

Q: How has the IMD Program evolved since its inception, and what milestones has it achieved?

A: The program graduated its first class of 37 students in spring 2024. Enrollment has grown steadily, with about 130 to 140 students currently enrolled, halfway to our goal of 260. We’ve established dedicated labs for photogrammetry, motion capture and XR development, as well as spaces for students to exhibit their work. Additionally, we’ve fostered partnerships with corporations and institutions to provide students with exposure and opportunities beyond the classroom.

Q: How does the program integrate cutting-edge technologies and trends into its curriculum?

A: Students have access to labs equipped for photogrammetry, sound recording, motion capture and VR/AR development. We also provide flexible design studios and projection spaces to support their work. These facilities align with our focus on collaborative design, asset creation and interactive deployment. By combining resources from the computer science and art departments, we ensure that students gain comprehensive hands-on experience with current tools and technologies.

Q: What challenges has the program faced in fostering collaboration between technical and creative disciplines?

A: One challenge is ensuring students with different skill sets can collaborate effectively. For instance, in a studio project, an art student might create paintings while a computer science student programs a game that integrates those paintings. Both students must develop overlapping skills in coding, design and asset creation to collaborate successfully. This holistic approach ensures that all students learn the foundational competencies of immersive media design.

Q: What are your future goals for the program, and how do you see it growing or expanding in the coming years?

A: One priority is strengthening career development and advising to help students identify job opportunities aligned with their skill sets. We also aim to expand research and creative opportunities, offering students more ways to engage in both technical innovation and artistic expression. Whether through traditional internships or campus-based projects, we want to help students build robust portfolios that showcase their abilities.

Q: How do you see immersive media shaping industries beyond entertainment, such as education, healthcare, or social advocacy?

A: Immersive media extends beyond gaming and entertainment into areas like education, healthcare and industrial design. For example, students can create virtual exhibits for museums, develop medical visualization tools or design engineering simulations. The field is constantly evolving, and our graduates are prepared to adapt to new applications, whether in training, visualization or interactive storytelling.

—Story by Samuel Malede Zewdu, CS Communications

###

For more information on the Immersive Media Design program, including curriculum details, faculty research and student projects, visit imd.umd.edu or contact the program directly at gcreson@umd.edu.

https://www.cs.umd.edu/article/2025/02/umds-immersive-media-design-program-bridges-art-and-technology

Step Into NarraSpace: UMD’s Hub for Immersive Storytelling and Inclusive Scholarship

With VR headsets and tactile tools, UMD's new lab is redefining what scholarship can look—and feel—like.

By Jessica Weiss ’05

What does it mean to truly feel the complexity of a human experience—like the layered emotions of diaspora or the unspoken nuances of code-switching? These are the questions being explored at NarraSpace, a new immersive storytelling lab at the University of Maryland.

Located within the Maryland Institute for Technology in the Humanities (MITH), NarraSpace invites scholars and creators—especially BIPOC and queer voices—to explore deeply personal, often indescribable experiences. With tools like virtual reality headsets, spatial reality displays and gaming equipment, the lab is helping scholars push the boundaries of what storytelling—and scholarship—can be.

"We're really asking: What does it take to bring the nuances of human experience into a tangible form?” said Professor of English Marisa Parham, who received a 2024 Teaching Innovation Grant to create the lab. “It might be a sound, a smell or even the feel of something in your hand. These tools allow us to describe, investigate and express stories that are often difficult to talk about outside of literature, music and film.”

Parham stressed the importance of the non-digital tools in the space; NarraSpace features tactile materials like Play-Doh, rocks and kinetic sand that she said can help scholars find new creative outlets for their ideas.

Elizabeth “Lisa” Abena Osei, a doctoral student in English whose research focuses on Black speculative fiction, specifically Afrofuturism and Africanfuturism, is a graduate researcher at NarraSpace. After learning the open-source software Twine through mentorship with Parham, Osei is designing a game that immerses players in Afrofuturist and Africanfuturist worlds, allowing them to experience unique cultures, technologies and narratives.

“I never anticipated taking a digital route with my work, but I truly love it,” Osei said. “There's so much that humanities students can do with their ideas, their stories and their research.”

Osei’s work reflects the lab’s mission to amplify stories that often go unheard in traditional scholarship. At NarraSpace, she has found mentorship and collaboration, presenting her progress to peers and faculty who’ve provided valuable feedback.

Among other current student projects: Christin Washington, a doctoral candidate in American studies, is using “speculative mapping” to look at Caribbean women’s lives in the context of mourning, space and digital infrastructure; and Alice Bi, a graduate student in English, combines the study of poetic form in African American and Asian American texts with touch technologies like interactive projection. Parham, meanwhile, is using the space to model how immersive technologies might work alongside more traditional scholarship.

Beyond individual projects, NarraSpace is fostering community and innovation. This semester, students in Parham’s “Black Digitalities” class will engage with the lab, and next month, artist and creative technologist Ari Melenciano ’14 will lead a workshop on the poetics of motion capture.

“We want to get people thinking with their hands in a comfortable setting with other scholars and artists,” Parham said. “That’s how we ideate.”

*****

Faculty, staff and undergraduate students interested in learning more should reach out to Cassandra Hradil, assistant director of NarraSpace. Graduate students should reach out to Marisa Parham.

Photo by Lisa Helfert.

https://arhu.umd.edu/news/step-narraspace-umds-hub-immersive-storytelling-and-inclusive-scholarship

2024

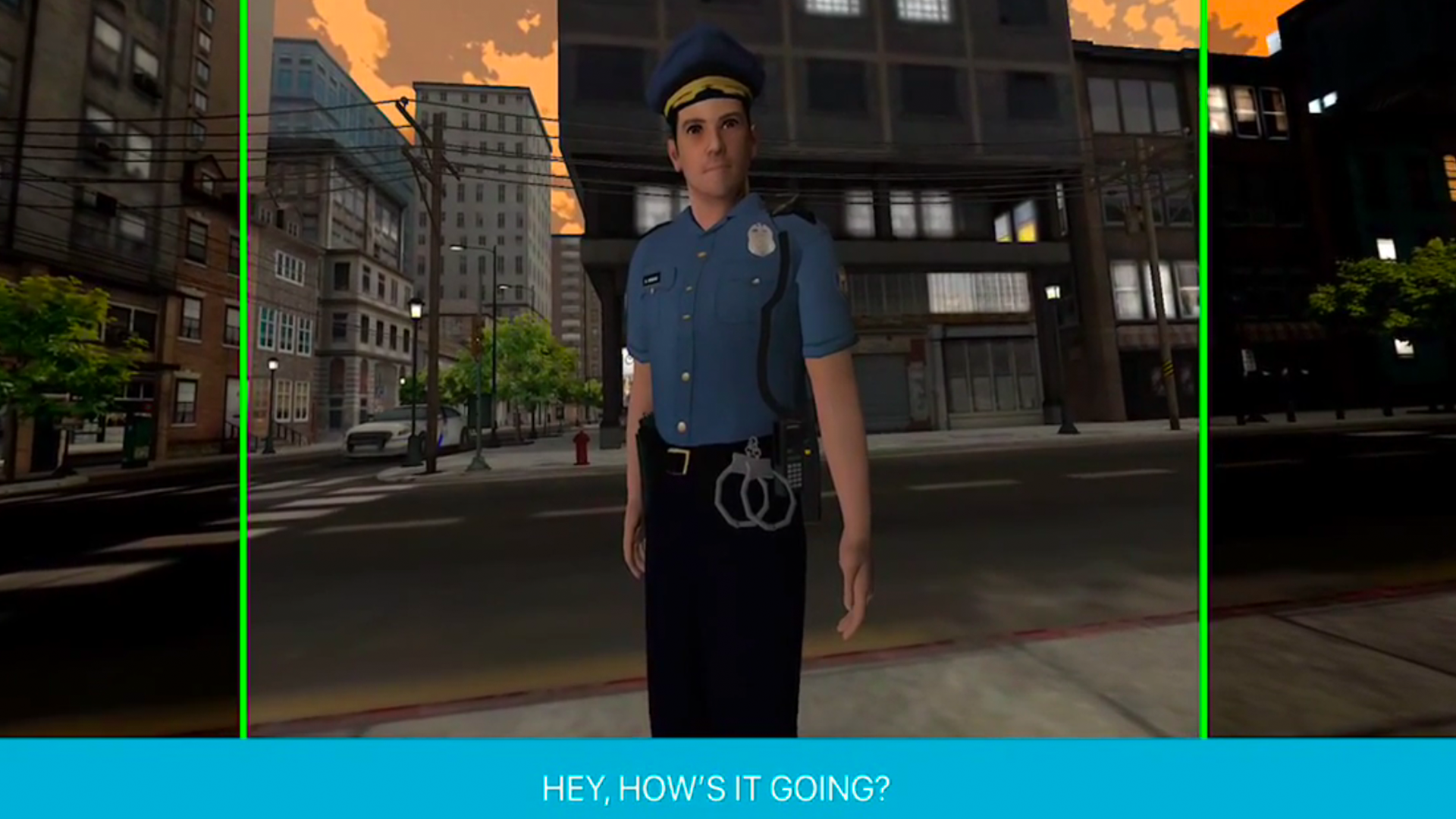

VR Can Boost Police Officer Empathy, UMD Study Finds

Officers Immersed in Simulated Confrontations Felt More Connection to People in Mental Health Crisis

By John Tucker

The job of police officer isn’t one that’s generally associated with touchy-feeliness, but a new University of Maryland study points to a tool that could help cops better connect with those they serve: virtual reality (VR).

Police officers who donned VR headsets to train for engagement with civilians suffering mental health crises reported feeling high levels of empathy, said Jesse Saginor, associate professor in UMD’s School of Architecture, Planning and Preservation and coauthor of the study, published this month in Criminal Behaviour and Mental Health.

“The more immersed they felt, the more they developed an emotional understanding of what the non-player character in crisis was going through,” Saginor said.

Police are often the first contact for people suffering a psychiatric emergency, and studies have shown that a quarter of people with mental illness have an arrest history. Many officers report that they aren’t adequately trained to respond to mental health crises, leading some to use more force than necessary.

By tapping into their emotional intelligence more deeply, officers can handle such situations in ways that better serve everyone, Saginor said.

An expert in quantitative methodologies, Saginor began collaborating on this study with lead author Florida Atlantic University criminologist Lisa M. Dario, an expert on police technology and community policing, while he worked there. For the experiment, the pair recruited 40 Florida officers, about half of whom had no experience with VR. After donning headsets, participants confronted a virtual civilian showing signs of schizophrenic psychosis, portrayed in a video by a virtual actor. After the simulation, officers completed surveys measuring immersion and empathy levels, among other things.

One of the more surprising findings was that officers who showed initial confusion about the virtual environment—mainly due to a lack of familiarity with the technology—were more likely to exhibit empathy toward the character in mental crisis.

“When respondents feel unsettled, they might focus more on understanding the characters, potentially as a coping mechanism to make sense of the virtual experience,” the authors wrote.

Saginor called the takeaway “one of the fascinating findings in terms of how officers experienced the environment. They’re trying to understand exactly what’s happening, what the character is feeling and what course of action they should take. They were highly immersed.”

Previous research has shown the benefits of VR training for law enforcement officers, but the UMD-Florida Atlantic University study is the first to gauge officers’ opinions and emotional reactions to it, Saginor said.

“Perspective-taking fosters empathy,” Dario said. “VR training enables officers to experience situations from the citizen’s viewpoint. When police respond to psychiatric and behavioral health crises, they often encounter chaotic environments with limited information. By recreating these unpredictable interactions, officers can practice strategic responses in ways that weren’t previously possible.”

The study raises questions about whether virtual experiences elicit compassion more effectively than traditional live-action training. Notably, VR simulations present officers with one-on-one scenarios, whereas live-action sessions typically involve groups of officers, which might limit empathic reactions, Saginor said.

The experiment reinforces the finding that VR can be a crucial training tool for law enforcement, provided that police officers find it realistic.

“As technology gets better, VR might further maximize immersion and sense of involvement, which could strengthen empathy and improve police-community interactions,” Saginor said.

https://today.umd.edu/vr-can-boost-police-officer-empathy-umd-study-finds

Haptic Hardware Offers Waterfall of Immersive Experience

‘Sensational’ New Gaming Tech Could Someday Aid Blind Users

Increasingly sophisticated computer graphics and spatial 3D sound are combining to make the virtual world of games bigger, badder and more beautiful than ever. And beyond sight and sound, haptic technology can create a sense of touch—including vibrations in your gaming chair from an explosion, or difficulty turning the wheel as you steer your F1 racecar through a turn because of g-forces.

While all this typically relies on force feedback using mechanical devices, University of Maryland researchers are now offering a new take that delivers lifelike haptic experiences with controlled water jets. But don’t worry—you and your surroundings will stay dry.

The team presented a paper and demoed its new waterjet-based technology known as JetUnit at the ACM Symposium on User Interface Software and Technology last month in Pittsburgh.

In addition to its use in gaming, the system might one day aid blind users by providing force feedback cues for spatial navigation and other interactions, thus enhancing accessibility, said project leader Zining Zhang, a computer science doctoral student in the Small Artifacts (SMART) Lab.

The UMD device offers a wide range of haptic experiences, from subtle sensations that mimic a gentle touch to sharp pokes that feel like a needle injection.

User testing has demonstrated that JetUnit is successful at creating diverse haptic experiences within a virtual reality setting, with participants reporting a heightened sense of realism and engagement. Compared to other available haptic technologies, the system can create an unusually broad range of forces based on changes in water pressure.

The key is a thin, watertight membrane made of nitrile—the same synthetic rubber used for sterile gloves—attached to a compact, self-contained chamber that effectively isolates the water from the user. And to reduce water turbulence within the membrane, the team 3D-printed several internal devices to allow strong forces without any leakage.

Zhang said the UMD team decided to try using contained water jets due to water’s efficiency in energy transfer as an incompressible fluid. It also presented the challenge, though, of keeping users dry—a significant focus of work as the team developed the system, she said.

Zhang said that as the project progressed she received significant feedback from her adviser, Huaishu Peng, an assistant professor of computer science who is director of the SMART Lab.

Other help on the project came from Jun Nishida, an assistant professor of computer science whose research involves wearable technologies for cognitive enhancement.

Both Peng and Nishida have appointments in the University of Maryland Institute for Advanced Computer Studies, which Zhang credits with providing a “rich, collaborative environment” for the team to do their work.

The Singh Sandbox makerspace and its manager, Gordon Crago, also assisted, providing an array of tools and lighting hardware as the team was developing the JetUnit prototype.

Looking ahead, Zhang envisions integrating thermal feedback via multi-level water temperature control and expanding JetUnit to become a full-body haptic system, paving the way for even more “sensational” uses.

https://today.umd.edu/haptic-hardware-offers-waterfall-of-immersive-experience

Medical Training Comes to Life in UMD’s VR Simulation

Physician Assistant Program at UMB Will Introduce Technology for Stroke Assessment

By John Tucker

October 02, 2024

The 76-year-old patient in the doctor’s office suddenly becomes mute and can’t move her right arm—classic symptoms of a stroke.

Cheri Hendrix, director of the physician assistant (PA) program at the University of Maryland, Baltimore, goes into assessment mode, her voice growing urgent. "Tell me what day it is," she says as she checks for a heart rate. "Tell me where you are."

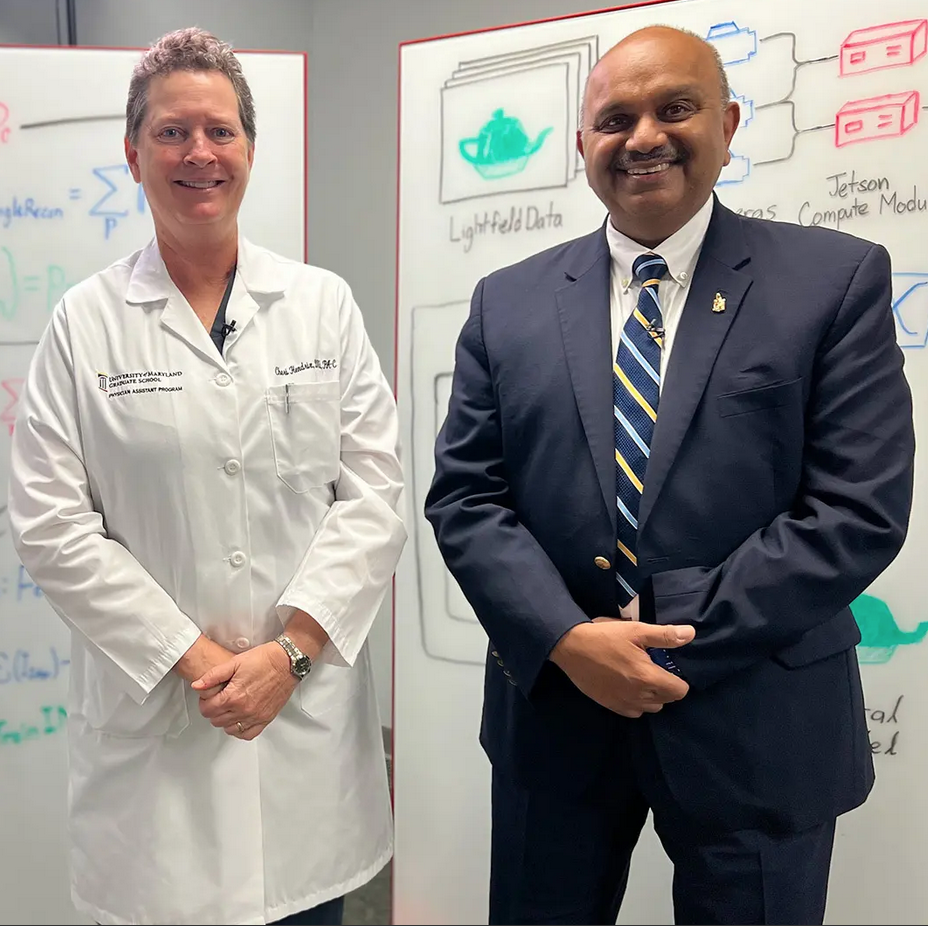

The scene isn’t playing out in real life. It’s a 3-D training simulation representing a common stroke scenario. Soon, PA program students outfitted with virtual reality (VR) goggles will be able to immerse themselves in the experience, watching Hendrix evaluate the patient as if they were with her, seeing what Hendrix sees and even “walking around” in virtual space to view the exam from different vantages.

The imaging facility used to create the scene, called the HoloCamera, was built by University of Maryland, College Park computer visualization experts in response to studies showing that people retain more information in immersive training environments. The 3-D medical training simulation project using the HoloCamera is a partnership between the UMD and UMB campuses.

“Sitting still and using a desktop and mouse doesn’t aid in forming memories as well as experiencing things in an embodied context,” said Amitabh Varshney, a professor of computer science, dean of UMD’s College of Computer, Mathematical, and Natural Sciences and lead investigator of the project. “Immersive interactivity is more educationally engaging than watching a video or flipping through a book.”

In September, Varshney’s team unveiled the technology to a pair of PA students. At the HoloCamera studio in College Park, second-year clinical student Ashlee Leshinski donned goggles and started moving her head from side to side. A wall monitor captured her fluid view as she observed Hendrix assessing the patient, a professional actor recruited for the project.

“Oh, wow, that’s super cool,” a grinning Leshinski said as she moved around the room and looked down, as if perched above the patient’s chair. “You can see her perspective and all the angles. It’s so clear, too.”