Video Library

IMD@UMD Workshop 2021

Watch all 15 virtual presentations given by UMD faculty and students. Below are the descriptions of each presentation.

The workshop opened with remarks from Roger Eastman, Director of Immersive Media Design; Amitabh Varshney, Professor and Dean of the College of Computer, Mathematical, and Natural Sciences (CMNS); and Bonnie Thornton Dill, Professor and Dean of the College of Arts and Humanities (ARHU). Watch the Opening Remarks.

The School of Theatre, Dance, and Performance Studies aims to create a game-driven training space for theatre students. Andrew Robert Cissna is a visiting assistant professor in the graduate lighting design program at the School of Theatre, Dance, and Performance Studies. He is a professional lighting designer for plays in D.C. and around the country. Watch this presentation.

Terminal Front, a collaborative effort funded by the University of Maryland, uses LiDAR and photogrammetry to create a virtual reality experience of the Helheim Glacier in Greenland. The project combines 4D LiDAR datasets shared by glaciologists with artist-led immersive media design and the CyArk organization's expertise in place-based digital storytelling.

Cy Keener is an assistant professor of art and an interdisciplinary artist who uses environmental sensing and kinetic sculpture to record and represent environmental phenomena. He also teaches Digital Media Theory and Culture in the Immersive Media Design program. Watch this presentation.

Adam Marton is the director of Capital News Service at the Philip Merrill College of Journalism. He is focused on quality storytelling across media, using design and technology to tell rich, human stories. Marton is a visual journalist and designer specializing in the presentation of the news, including data visualization, front-end development, and information graphics. Watch this presentation.

High-density documentation of cultural heritage sites and artifacts is becoming more common as the costs of laser scanning and photogrammetry drop and their processing becomes more user-friendly. Interpreting this material for the public, however, is still a challenge. North Brentwood is a historic African American town just south of College Park.

A project is underway to document the town's built environment before gentrification permanently obscures it. VR presents an opportunity to immerse diverse publics across the globe in an interactive environment where they can engage with the history of this small town.

AR provides an opportunity to share historic documents, photographs and oral histories with any visitor who has a smartphone. Linking these two technologies to disseminate significant cultural heritage stories will encourage visits to the town and will help new residents and visitors learn what makes North Brentwood's history so special.

Stefan Woehlke is a Ph.D. candidate in archaeology at the School of Architecture, Planning, and Preservation, where he focuses on heritage, diaspora theory and post-emancipation studies. He is the co-founder of a D.C. food tour company, where his passions for food, history and culture intersect. Watch this presentation.

Caroline Paganussi is an art historian because of classes she took during a study abroad semester in Bologna, home to Europe’s first university. The classroom was often the city itself, especially its churches. As the Robert H. Smith Fellow for Digital Art History, she is working with Gregory on approximating that experience through XR (AR within VR) for online teaching.

Ironically, the pandemic, for which such teaching is ideal, precluded gathering much of the necessary content and media this past summer. Nevertheless, they are prototyping the concept, and at the same time have pivoted to structuring the data of a period book about Bolognese art so that it can be mapped and ultimately experienced as an augmented walk through the city.

Caroline Paganussi is a Ph.D. candidate and Jenny Rhee Fellow studying Italian Renaissance Art in the Department of Art History and Archaeology. Broadly, her research focuses on the exploration and expression of humanistic discourse in painting and printmaking in the fifteenth and sixteenth centuries. Watch this presentation.

Virtual reality, augmented reality, and mixed reality are emerging spatial technologies that are changing our relationship not just to each other, but to our environment as well. Helping humanity understand how best to use these technologies requires spatial storytellers and technologists who can collaborate in these new mediums. As part of the Immersive Media Design program, we're bringing together a diverse group of students to explore the untapped narrative potential of spatial technologies.

Jonathan David Martin is a director, actor, producer and teaching artist focused on the creation and presentation of contemporary works of performance. He teaches the Immersive Media Design course Augmented Reality Design for Creatives and Coders. He is also the co-artistic director of Smoke & Mirrors Collaborative, a non-profit that creates original works for theater, VR/XR and the web with socially relevant themes. Watch this presentation.

Mollye Bendell is a studio arts lecturer and the first faculty member to teach Intro to Immersive Media Design at UMD. As a professional artist, she creates digital and analog sculptures using the intangible nature of electronic media as a metaphor for exploring vulnerability, visibility and longing in a world that can feel isolating. Watch this presentation.

Virtual reality technology promises the unique capability to create a sense of presence for users in virtual environments. This requires computing highly detailed, photorealistic imagery in a matter of milliseconds, which is a challenge even for today's specialized high-performance processors. Matthias Zwicker discusses how research and advances in computer graphics rendering algorithms are bringing us closer to movie-quality rendering in interactive VR applications.

Matthias Zwicker is a professor and the chair of the Department of Computer Science, as well as a lead faculty member of the Immersive Media Design major. He develops high-quality rendering and signal processing techniques that are used for computer graphics, data-driven modeling, and animation. Watch this presentation.

In this panel, the Maryland Blended Reality Center (MBRC) team will discuss some of their most impactful ongoing projects, including a virtual reality tool for weather forecasting, an immersive concert, and a virtual module for developing surgical skills.

Barbara Brawn is the associate director of the Maryland Blended Reality Center, a lab that is dedicated to advancing visual computing for health care and innovative training for professionals in high-impact areas using AR and VR technologies. Brawn is followed by MBRC AR/VR researchers Jon Heagerty, Sida Li and Eric Lee. Watch this presentation.

Monifa Vaughn-Cooke is an assistant professor of mechanical engineering. Her interdisciplinary research aims to identify the behavioral mechanisms associated with system risk propagation to inform the design of user-centric products and systems, with the ultimate goal of improving productivity and safety. Watch this presentation.

The INTENT autism app will use mixed reality to build an empathy tool that alters the user's sensory input in ways that mimic the perceptions of autistic individuals. The project will also assess how effectively the tool promotes greater understanding, acceptance, and inclusion of people with autism and other neurodivergent conditions.

Kathryn Dow-Burger is a clinical associate professor in the Department of Hearing and Speech Sciences. Her interests focus on social communication disorders, language-learning disabilities, emergent literacy, and stuttering. She is the founder and program designer of UMD's Social Interaction Group Network for All (SIGNA).

Elizabeth Redcay is an associate professor in psychology and director of the Developmental Social Cognitive Neuroscience Lab. Her research examines the development and neural correlates of social interaction and social cognition in both typical and atypical development, specifically in individuals with autism spectrum disorder.

Andrew Begel is a principal researcher in the Ability Group at Microsoft Research. His work looks at how technology and AI can play a role in extending the capabilities and enhancing the quality of life for people with disabilities. Watch this presentation.

I-Corps is a National Science Foundation program designed to foster, grow and nurture innovation ecosystems regionally and nationally. In this session, Kunitz will discuss how the program provides real-world, hands-on training that helps researchers and early-stage technology entrepreneurs turn their innovations into successful products that solve societal problems.

Daniel Kunitz represents the I-Corps program at the A. James Clark School of Engineering. He is the executive director of the DC I-Corps Node, a role in which he advises and supports all early-stage technology companies in the NSF-sponsored DC I-Corps program. Watch this presentation.

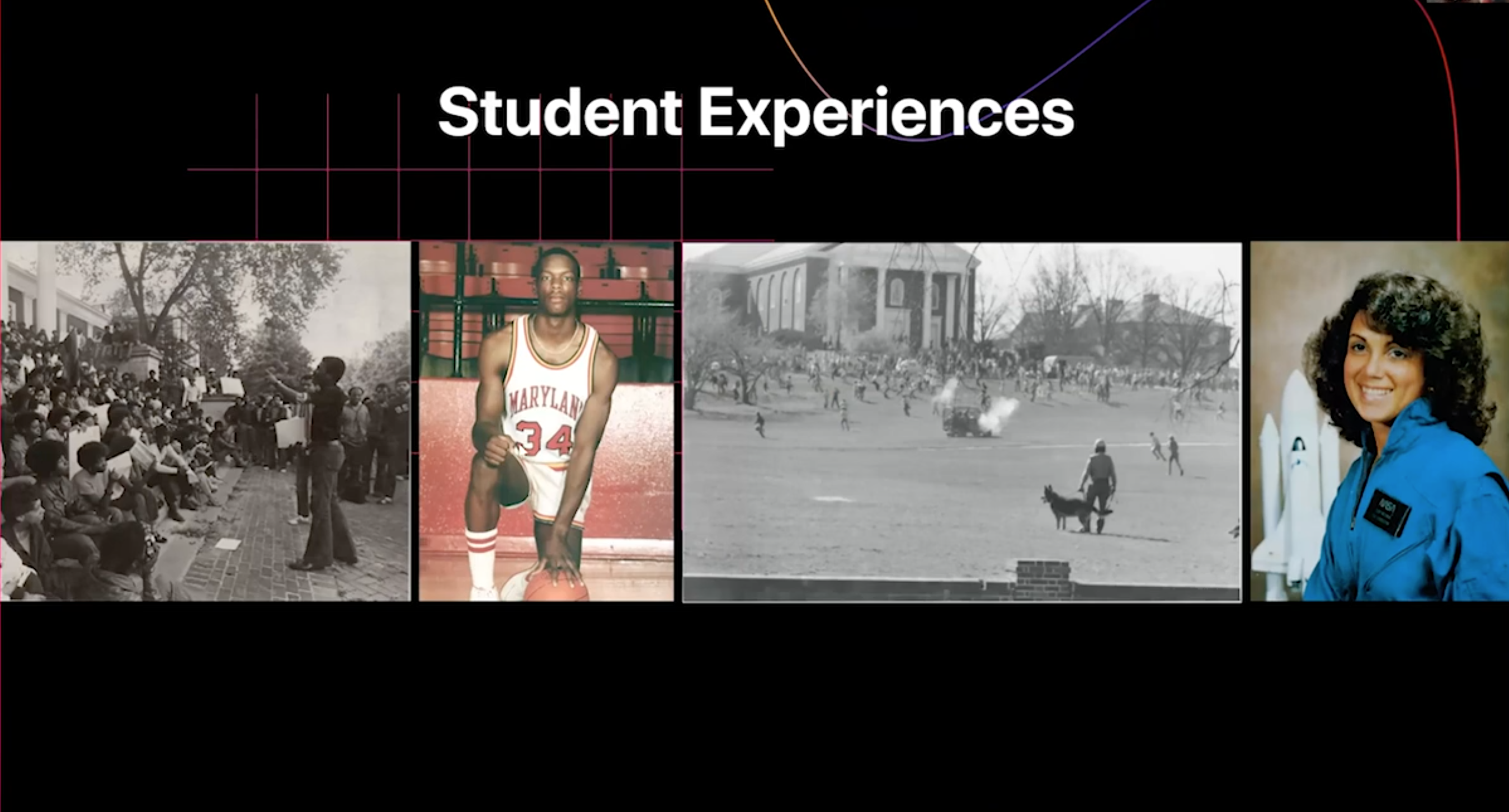

This project was originally created to give first-time UMD students an augmented reality tour of campus during the pandemic, and it has since grown into much more. UMD has an extremely rich history, and only by physically seeing the juxtaposition of past and present can we grasp how many generations of students, and how much change, it has taken to produce what stands before us today.

Aishwarya Tare '22 is majoring in information science with a focus in human-centered design and a minor in art history. She is interested in rendering experiences using interaction design skills in AR/VR, human-computer interaction, and product design. Watch this presentation.

The XR Club is a student organization at UMD that provides students with resources and opportunities to explore virtual, augmented and mixed reality through hands-on tutorials, projects, hackathons, game nights, open lab hours, mentor office hours and more! This presentation will give an overview of the club and feature some of its recent projects, including the NASA SUITS challenge.

Sahil Mayenkar is a computer engineering major and the president of the XR Club. He previously taught the student-initiated course CMSC388M Introduction to Mobile XR and worked as an augmented reality developer intern at New Wave. Watch this presentation.

MAVRIC 2020 Conference

This two-day virtual conference was held September 10th and 11th, 2020. It was co-hosted by the Chesapeake Digital Health Exchange. The 2020 conference explored ways that extended reality (VR/AR/MR) is impacting health care, business, art, intelligence, defense, and government.

MAVRIC 2019 Conference