Community

Faculty, Staff & Researchers

The University of Maryland XR and Immersive Media Community includes dozens of faculty, staff, and researchers who span many schools, colleges, departments, and programs across the campus. Get to know these individuals, their labs and centers, and some of their projects.

Labs & Researchers

The Applied Research Laboratory for Intelligence and Security (ARLIS), based at the University of Maryland College Park, was established in 2018 under the sponsorship of the Office of the Under Secretary of Defense for Intelligence and Security (OUSD(I&S)).

As a University-Affiliated Research Center (UARC), our purpose is to be a long-term strategic asset for research and development in artificial intelligence, information engineering, and human systems.

ARLIS builds robust analysis and trusted tools in the "human domain" through its dedicated multidisciplinary and interdisciplinary teams, grounded both in the technical state of the art and a direct understanding of the complex challenges faced by the defense security and intelligence enterprise.

Our outstanding research teams draw from a wide range of diverse expertise and disciplines, including engineering, data science, psychology, computer science, anthropology, rhetoric, cognitive science, political science, cybersecurity, linguistics, and artificial intelligence. These technical experts work with former and current defense and intelligence operators and policymakers to solve difficult national security problems, resulting in quality research that is relevant both to academia and our operational partners.

Research Projects

AI and VR to Augment Mission Planning

In 2019 and 2020, ARLIS worked with a team from the Army Futures Command C5ISR Center to develop a prototype distributed collaboration tool based on virtual reality (VR) technology. The prototype enables physically distributed commanders to plan and rehearse a mission around a virtual "sand table" as if they were physically co-located. The distributed planners collaborate around a virtual representation of the physical terrain, building plans using standard military symbology and then rehearsing the scenarios through simulation over constrained communication channels.

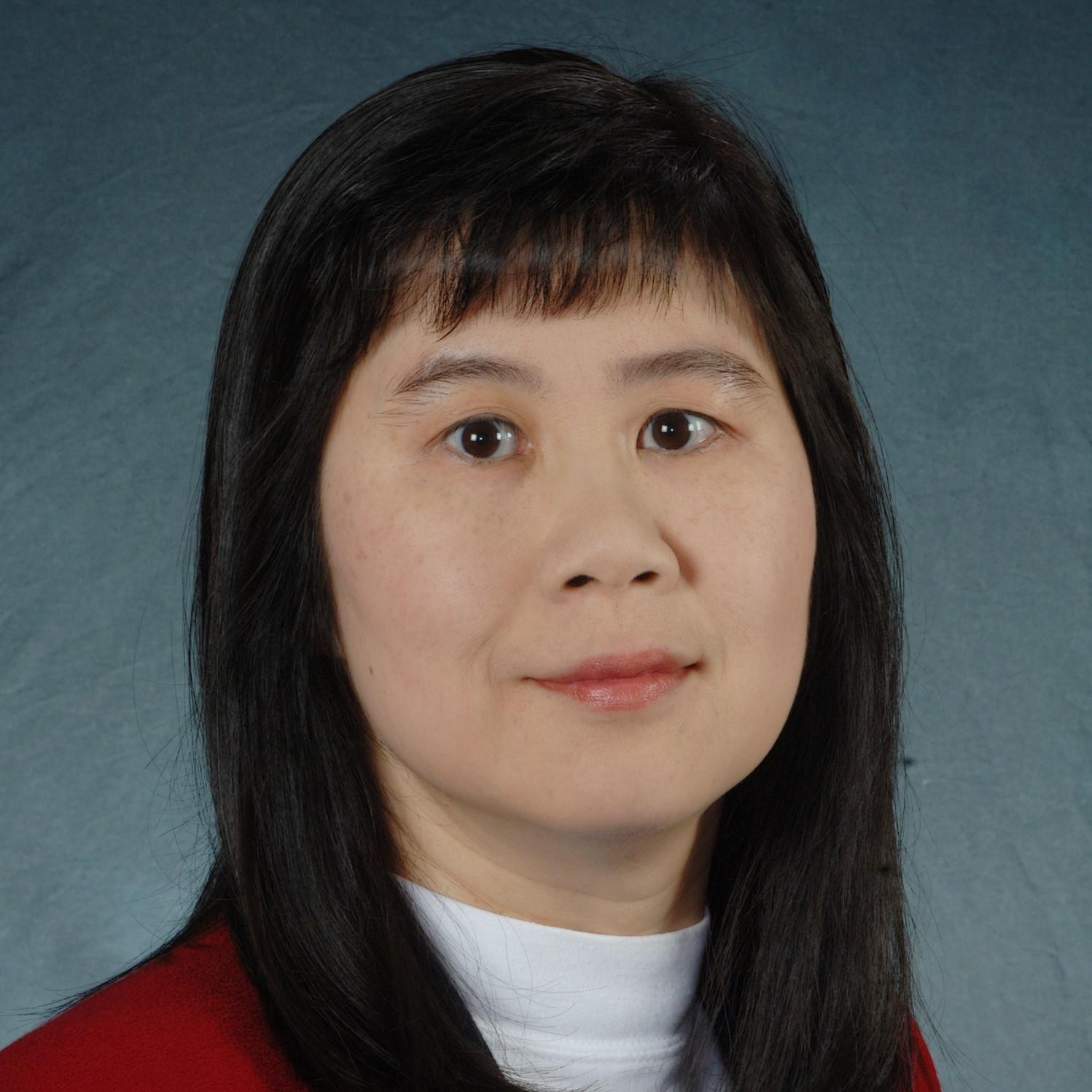

Victoria Chang

What does BRAVR do?

Apply the techniques of neuroscience to discover physiological effects of built environments on human occupants.

Goal

Characterize and understand human experience in the built environment. Use established neuroscience methodologies to create empirical criteria for evaluating built environments’ impacts on health, safety, and welfare.

Vision

A world in which building design will respond to human experience. Designers working together with psychologists to design better offices for professionals, schools for children, and hospitals for patients.

Focus

- Developing techniques and platforms to apply neuroscience knowledge to built environment disciplines

- Creating criteria for measurement of human experience in the built environment

- Integrating self-reported experiences with cognitive neuroscience measures

- Cultivating knowledge leading to a better design process

Research Project

Sustainable Environment’s Impact

The goal of this research project is to develop, test, and validate a data-driven approach using virtual reality (VR) and electroencephalogram (EEG) technology for understanding the potential physiological influences of sustainable design features. We propose technology-enabled, repeatable measures for quantifying how sustainable building features affect occupants' emotions and cognitive functions, which serve as proxies for health and well-being.

Madlen Simon

Ming Hu, AIA, NCARB, LEED AP

Established in January 2021, the Brain and Behavior Institute (BBI) elevates and expands existing strengths in UMD neuroscience, advances the translation of basic science, recruits outstanding and diverse faculty, and establishes world-class core facilities.

The BBI is a centralized community of investigators who pursue bold, impactful research on the brain and behavior. By fostering excellence and innovation and promoting collaborations between neuroscience and engineering, computer science, mathematics, physical sciences, cognitive sciences and humanities, the BBI unites existing strengths at the University of Maryland and provides the platform for the elevation and expansion of campus neuroscience. We are administratively housed in CMNS and support the University of Maryland research enterprise.

Specific research foci targeted for growth and expansion include neuro-development and neuro-aging, the two temporal epochs most critical to the acquisition and maintenance of sensation, perception, cognition, mental health and physical health as well as most vulnerable to disruption by changes in the internal or external environment.

One area of focus is Artificial & Virtual Networks.

Research Projects

- Learning Age and Gender Adaptive Gait Motor Control-based Emotion Using Deep Neural Networks and Affective Modeling: Detecting and classifying human emotion is one of the most challenging problems at the confluence of psychology, affective computing, kinesiology, and data science. This research examines the role of age and gender on gait-based emotion recognition using deep learning.

- Project Dost (named for the Hindi word for “friend”) combines data about gait, gesture, facial expression and speech intonation to generate an assistant that can interact in culturally sensitive contexts by perceiving, interpreting and responding to your emotions.

- Recording Brain Waves to Measure ‘Cybersickness’ - In a first-of-its-kind study, researchers at the University of Maryland recorded VR users’ brain activity using electroencephalography (EEG) to better understand and work toward solutions to cybersickness. The research, conducted by computer science alum Eric Krokos ’13, M.S. ’15, Ph.D. ’18, and Amitabh Varshney, a professor of computer science and dean of the College of Computer, Mathematical, and Natural Sciences, was published recently in the journal Virtual Reality.

- Find more research projects here.

Dinesh Manocha

Dr. Aniket Bera

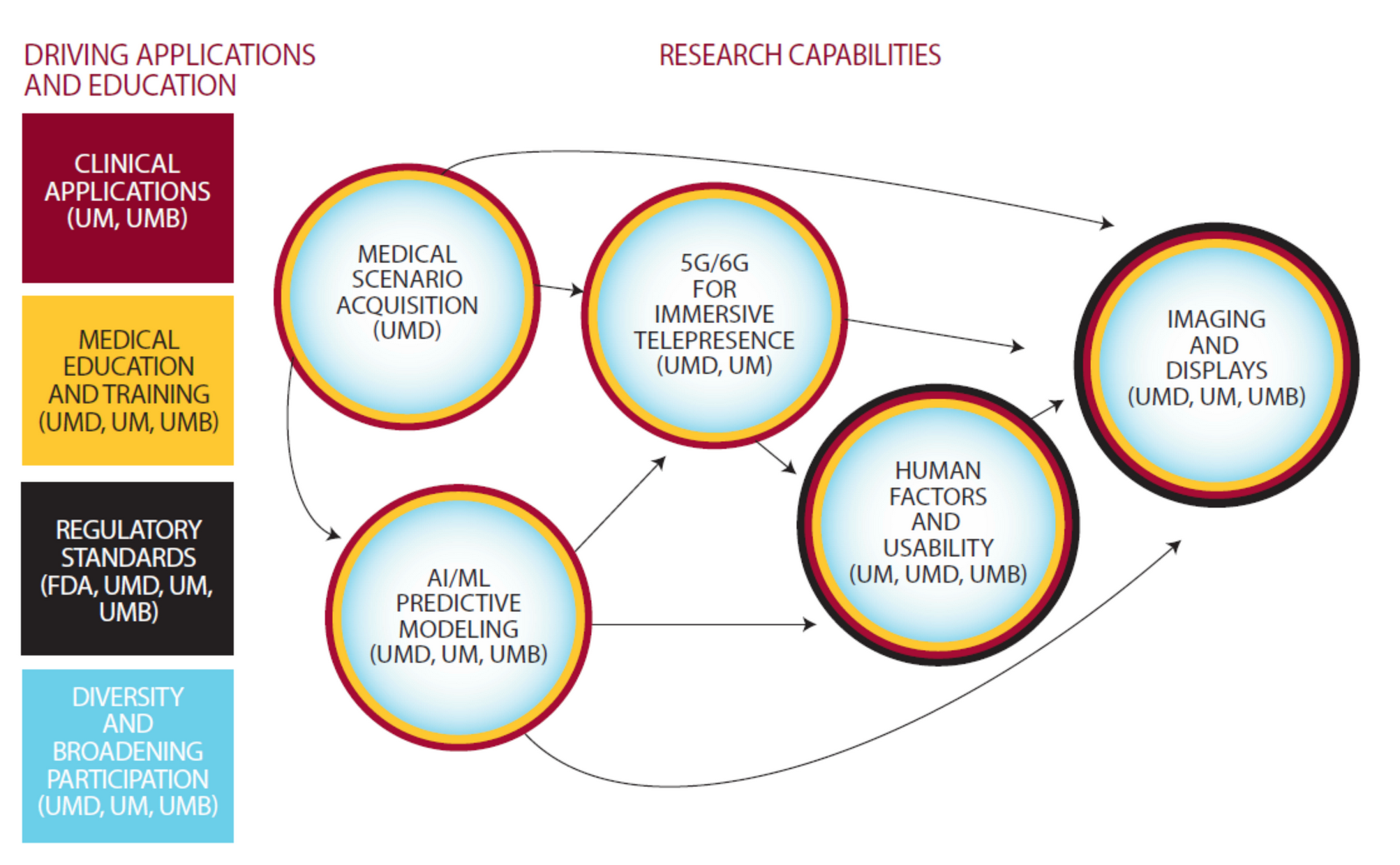

The Center for Medical Innovation in Extended Reality (MIXR)’s driving applications are clinical practice, medical education and training, and regulatory standards. We have unique capabilities in scene acquisition, predictive modeling, immersive telepresence, human factors, and imaging and displays. Each of these elements additionally offers opportunities for broadening engagement and participation.

MIXR will provide the scientific foundation in support of regulatory requirements and decisions regarding XR devices; our focus will be on current gaps and evaluation challenges across a range of clinical specialties and various XR hardware and software platforms.

Research Capabilities & Projects

Dean Amitabh Varshney

Dr. Sarah Murthi

Barbara Brawn

The Extended Reality Flight Simulation and Control Lab combines (1) virtual and augmented reality (VR/AR), (2) wearable devices such as full-body haptic feedback and biometry tracking suits, and (3) motion-base systems to create immersive, Extended Reality (XR) piloted flight simulations. Research topics include human-machine interaction, innovative pilot cueing methods, and advanced flight control laws.

Dr. Umberto Saetti

The GAMMA research group was formed in 1995 and conducts research in the design and implementation of algebraic, geometric and scientific algorithms, and their applications to computer graphics, robotics, virtual environments, CAD/CAM, acoustics, pedestrian dynamics and medical simulation.

In addition to publishing papers at the leading venues, GAMMA has a long history of developing software packages and transitioning technology into industrial products, with more than 60 licenses to major corporations.

Metaverse Research Projects at GAMMA

- Project Dost - AI-driven virtual conversational assistant. Dost's gestures, facial expressions, and speech are generated in real time using state-of-the-art research in affective computing, emotional modeling, character and speech generation, and virtual reality. Dost combines our generative work to create realistic interactive avatars designed to hold conversations and behave like real humans, not only in terms of content but also in emotional and behavioral expressiveness. We are also using Dost to create Virtual Therapists to bridge the demand-supply gap in telemental health.

- Affective Virtual Agents - As the world increasingly uses digital and virtual platforms for everyday communication and interactions, there is a heightened need to create highly realistic virtual agents endowed with social and emotional intelligence. Interactions between humans and virtual agents are being used to augment traditional human-human interactions in different Metaverse applications. Human-human interactions rely heavily on a combination of verbal communications (the text), inter-personal relationships between the people involved (the context), and more subtle non-verbal face and body expressions during communication (the subtext). While context is often established at the beginning of interactions, virtual agents in social VR applications need to align their text with their subtext throughout the interaction, thereby improving the human users’ sense of presence in the virtual environment. Affective gesticulation and gaits are an integral component in subtext, where humans use patterns of movement for hands, arms, heads, and torsos to convey a wide range of intent, behaviors, and emotions.

- Locomotion Interfaces for VR - Exploring virtual environments/Metaverse is an integral part of immersive virtual experiences. Real walking is known to provide benefits to sense of presence and task performance that other locomotion interfaces cannot provide. Using an intuitive locomotion interface like real walking has benefits to all virtual experiences for which travel is crucial, such as virtual house tours and training applications. Our work on Redirected walking (RDW) as a locomotion interface allows users to naturally explore VEs that are larger than or different from the physical tracked space, while minimizing how often the user collides with obstacles in the physical environment.

- Audio Simulation - In recent years, there has been a renewed interest in sound rendering for interactive Metaverse/XR applications. Our group has been working on novel algorithms for sound synthesis, as well as geometric and numeric approaches for sound propagation.

- User-Centric Social Experiences - We developed novel approaches for creating user-centric social experiences in virtual environments that are populated with both user-controlled avatars and intelligent virtual agents. We propose algorithms to increase the motion and behavioral realism of the virtual agents, thus creating immersive virtual experiences. Agents are capable of finding collision-free paths in complex environments and interacting with the avatars using natural language processing and generation, as well as non-verbal behaviors such as gazing, gesturing, and facial expressions. We also built a multi-agent simulation framework that can generate plausible behaviors and full-body motion for hundreds of agents at interactive rates.

Dr. Ming C Lin

Dinesh Manocha

The Human-Computer Interaction lab (HCIL) has a long, rich history of transforming the experience people have with new technologies. From understanding user needs, to developing and evaluating those technologies, the lab’s faculty, staff, and students have been leading the way in HCI research and teaching.

We believe it is critical to understand how the needs and dreams of people can be reflected in our future technologies. To this end, the HCIL develops advanced user interfaces and design methodology. Our primary activities include collaborative research, publication, and the sponsorship of open houses, workshops and symposiums.

We are jointly supported by the College of Information Studies (iSchool) and the University of Maryland Institute for Advanced Computer Studies (UMIACS). Our faculty come from a variety of departments across campus including computer science, education, psychology, engineering, and the humanities. We are brought together by a core focus on understanding how to design technologies to support positive impact in the world.

Research Projects

- StreamBED VR is a virtual reality training tool that teaches qualitative stream monitoring to citizen scientists. The goal of the system is to create an experience that guides novice water monitors to focus on key areas of the stream so that they can understand and evaluate streams relative to other similar stream spaces using visual, auditory and other sensory cues. This work contributes to research in the domains of citizen science, VR education, and multisensory design.

- Find many more research projects here.

Evan Golub

Huaishu Peng

The Lab for Applied Social Science Research (LASSR) focuses on a series of critical issues of public concern including policing, community relations, health disparities, and education inequality. LASSR aims to partner and collaborate with government entities, organizations, and businesses to better address these issues. LASSR was developed after a group of social scientists realized that the innovative research being conducted inside of universities was limited in its ability to extend outside of universities. LASSR aims to better collaborate with policy makers and community members to bridge the gap between academics and non-academics. LASSR’s core belief is that scholarly research can simultaneously be rigorous and applied directly to impacting policy and community.

A central goal of LASSR is to become a key point of contact within the academic community for policy makers, business leaders, and organizations wanting to collaborate and conduct research on a pressing issue of public concern. LASSR aims to be the premier research laboratory for the Baltimore/Washington DC Corridor.

Research Projects

LASSR Virtual Reality Policing Simulator Program - The Lab for Applied Social Science Research (LASSR), in collaboration with the University of Maryland Institute for Advanced Computer Studies (UMIACS) and corporate sponsors, has developed a cutting-edge virtual reality program that merges social science with computer science. Housed in the College of Behavioral and Social Sciences (BSOS) and the Department of Sociology, this program provides a platform to evaluate decision-making in an immersive virtual reality environment. We have the ability to create tactical and social simulations that can be used to test decisions. We are willing to fly small groups of officers to the University of Maryland and/or host them there to test their performance in this simulator pre- and post-training. Because of an existing grant, these costs will be covered by LASSR.

Dr. Rashawn Ray

The Maryland Blended Reality Center (MBRC) is a multidisciplinary partnership that joins computing experts at the University of Maryland with medical professionals at the University of Maryland, Baltimore. The center’s mission is to advance visual computing tools—many of them based in immersive technologies like virtual, augmented and mixed reality—that can be used for emergency medicine, health care and innovative educational and training modules. Launched in 2017, MBRC was initially funded by the University of Maryland Strategic Partnership: MPowering the State. MBRC is also partnering with the federal government, other academic institutions and industry leaders to develop a variety of mixed reality applications.

Research Projects

- Augmented Reality for External Ventricular Drainage

Collaborators: Greg Schwartzbauer, R Adams Cowley Shock Trauma Center

External ventricular drainage (EVD) is a high-risk procedure that involves inserting a catheter inside a patient’s skull to drain cerebrospinal fluid and relieve intracranial pressure. To assist with this procedure, we have developed the Augmented Reality Catheter Tracking and Visualization Methods and System, which accurately projects both a catheter placed into the human brain through the skull, and a brain CT scan overlaid on the skull, onto AR glasses. Our technique uses a new linear marker detection method that requires minimal changes to the catheter and is well-suited for tracking other thin medical devices that require high-precision tracking.

- COVID-19 Patient Data Visualization Tools

Collaborators: R Adams Cowley Shock Trauma Center

Point-of-care ultrasound (POCUS) is ideal for lung and cardiac imaging in patients with COVID-19, as it is a rapid and lower-risk imaging option providing important anatomic and functional information in real time. We are developing new computational tools that will store, analyze and display POCUS images quickly, accurately, and in a way designed to facilitate clinical decision-making. The technology will allow quantification and standardization of lung and cardiac ultrasound findings in COVID-19, which is not currently possible. While this effort is COVID-19-specific, the technology will impact and improve the care of all acutely ill patients, fundamentally changing how patients are managed both in and out of the hospital setting.

- Four Strings Around the Virtual World

Collaborators: Irina Muresanu, UMD School of Music

We’ve spearheaded a unique collaboration between the University of Maryland’s School of Music and College of Computer, Mathematical, and Natural Sciences, combining classical music with modern technology to transform the way people experience a concert performance. The Maryland Blended Reality Center built a prototype VR system to capture pieces from world-renowned concert violinist Irina Muresanu’s “Four Strings Around the World” project, a series of solo violin pieces representing traditional music from the Middle East, South America, Europe, China and the United States. The resulting experience transports viewers to scenic locations around the world, which represent these culturally-diverse pieces as Muresanu performs them.

- Weather Forecasting in Virtual Reality

Collaborators: Mason Quick, Patrick Meyers, Scott Rudlosky, ESSIC

Immersive visualization technology is rapidly changing the way three-dimensional data is displayed and interpreted. Rendering meteorological data in four dimensions (space and time) exploits the full data volume and provides weather forecasters with a complete representation of the time-evolving atmosphere. With the National Oceanic and Atmospheric Administration, we created an interactive immersive “fly-through” of real weather data. Users are visually guided through satellite observations of an event that caused heavy precipitation, flooding, and landslides in the western United States. This narrative and display highlight how VR tools can supplement traditional forecasting and enhance meteorologists’ ability to predict weather events.

- Find many more research projects here

Dean Amitabh Varshney

Dr. Sarah Murthi

Barbara Brawn

MITH is the home of an interdisciplinary group of researchers who collaboratively advance the study of cultural heritage and arts using computational technologies while also training the insights and approaches of the humanities on the computational technologies that shape our world.

As a center within the College of Arts and Humanities at the University of Maryland, College Park, MITH has served as a world-class concentration of expertise for more than 20 years. We also teach courses and host events for campus and public communities in support of our core research mission.

You might know us through our work on the African American History, Culture and Digital Humanities (AADHum) Initiative, the Documenting the Now project, the Shelley-Godwin Archive, or the other work of one of our faculty and staff members.

Descriptions of MITH Research Projects can be found here.

Jason Farman

Cassandra Hradil

Jeffrey Moro

Andrew W Smith

The Michelle Smith Collaboratory for Visual Culture is a learning space in the Department of Art History and Archaeology made possible by the generosity of the Robert H. Smith family.

The Collaboratory fosters an environment of curious and resourceful inquiry into the digital humanities, specifically digital art history, to support student and faculty research and teaching, both within the department and throughout the university community.

As fellows and interns, graduate and undergraduate students form the core of our Collaboratory community and thrive in a space of expression and iterative, hands-on learning. Our ethos of outreach, support and co-creation of innovative projects and processes is forged with partners both at the University of Maryland and in communities beyond campus borders, including area museums such as The Phillips Collection and the Riversdale House Museum.

The staff of the Collaboratory, whose research and expertise extend into many aspects of both art history and archaeology, is committed to creating a welcoming, supportive, and equitable space for all, a place where we embrace the process of discovery and experimentation.

Research Projects

- Photogrammetry @ Riversdale: Two students in the Collaboratory, Charline Fournier-Petit (Art History) and Kenna Hernly (Education), working with Chris Cloke and Quint Gregory, photographed objects in the Riversdale House Museum collection and modeled these objects in Agisoft’s Photoscan photogrammetry software as 3D virtual objects.

- Augmenting Visitor’s Center @ Riversdale: Alex Cave, an undergraduate intern at Riversdale in fall 2018, developed an augmented reality scavenger hunt for kids visiting the Visitor’s Center at Riversdale House Museum. Unfortunately, this brilliant project’s life was short, as HPReveal, the platform on which Alex created the experience, was retired on July 1, 2019. A glimpse of the app in action can be spotted in the In Frame video series of Alex’s work at Riversdale.

- Accessibility @ Riversdale: Using a 360 degree camera, Alex Cave created a tour of the second-floor spaces (and more) that are inaccessible to visitors unable to climb stairs in the historic home of Riversdale.

- Study Collection VR @ Collaboratory: Two undergraduate interns, Josh Batugo and Sarah-Leah Thompson, worked with Quint Gregory and Chris Cloke to learn about the Department’s Study Collection and to consider better methods for preserving and displaying these objects than those currently in use. Their work led to the creation of a new case design rendered in Maya and accessible in a Unity environment of the Department’s fourth floor, where virtual objects from the collection, created through photogrammetry, could be curated in the cabinet. This project represents a beginning onto which students (undergraduate and graduate) will build.

- Find more research projects here.

Located within the Maryland Institute for Technology in the Humanities (MITH), NarraSpace invites scholars and creators—especially BIPOC and queer voices—to explore deeply personal, often indescribable experiences. With tools like virtual reality headsets, spatial reality displays and gaming equipment, the lab is helping scholars push the boundaries of what storytelling—and scholarship—can be.

Projects:

- Elizabeth “Lisa” Abena Osei, a doctoral student in English whose research focuses on Black speculative fiction, specifically Afrofuturism and Africanfuturism, is a graduate researcher at NarraSpace. After learning the open-source software Twine through mentorship with Parham, Osei is designing a game that immerses players in Afrofuturist and Africanfuturist worlds, allowing them to experience unique cultures, technologies and narratives.

- Christin Washington, a doctoral candidate in American studies, is using “speculative mapping” to look at Caribbean women’s lives in the context of mourning, space and digital infrastructure; and Alice Bi, a graduate student in English, combines the study of poetic form in African American and Asian American texts with touch technologies like interactive projection.

Cassandra Hradil

The SMART Lab advances interactive technologies by designing, prototyping, and evaluating novel artifacts that are personal, hands-on, and often small in form factor.

Our interests span the methods of building these personal artifacts (through design and interactive fabrication), the scenarios in which they're used (in virtual and augmented reality), and the users who can benefit from them (via assistive and enabling technology).

Huaishu Peng

The University of Maryland Autism Research Consortium (UMARC) is an interdisciplinary group of researchers, clinicians, and service providers in the Departments of Hearing and Speech Sciences, Psychology, Human Development, Linguistics, Kinesiology, Special Education, Career Center EmployABILITY program, and MAVRIC at the University of Maryland in College Park, and the University of Maryland Schools of Medicine and Social Work in Baltimore.

We seek to advance the understanding of Autism Spectrum Disorders (ASD) in children and adults, and to contribute to the development of effective treatments and interventions. Our research examines the social, cognitive, linguistic, and neural underpinnings of autism. We also host professional training and the Community-wide Learning about Autism Speaker Series: UMARC CLASS has transitioned to a podcast format rather than in-person events to make attending talks easier for families, students, and professionals in the community and throughout the world!

Research Project

Project Intent

Kathy Dow-Burger, M.A., CCC-SLP

Elizabeth Redcay, Ph.D.

Programs & Educators

The new Arts for All initiative partners the arts with the sciences, technology and other disciplines to develop new and reimagined curricular and experiential offerings that nurture different ways of thinking to spark dialogue, understanding, problem solving and action. It bolsters a campus-wide culture of creativity and innovation, making Maryland a national leader in leveraging the combined power of the arts, technology and social justice to collaboratively address grand challenges.

Arts Improve the Student Experience

We are prepared to address the growing student and industry demand to integrate the arts into student life, both within and beyond the curriculum, helping students access and amplify their creative talents and fostering collaborative and innovative thinking to solve problems.

Arts Create an Inclusive Environment

We are harnessing the power of the arts to spark civic dialogue, increase community engagement and activate social change.

Arts Advance the University

We are accelerating innovation, discovery and insights through collaborations among the arts, the humanities and the sciences through research, creative activity and technological innovation.

Adriane Fang

Design Cultures & Creativity explores the roles and impact of design in our societies and creative practices; we are theorists and thinkers who investigate the digital age through designing, analyzing, and making.

Design Cultures & Creativity fosters an open, collaborative, and social environment that encourages students to explore relationships between design, society, and creative practices. We are passionate about emerging technologies and their impact on the world, and even more importantly, how our designs can change the world. DCC students are innovative thinkers and makers who engage in research and collaborative projects on topics as varied as identity, connectivity, social justice, art, design, and all things creative in an era when digital media link us on a scale unprecedented in human history. DCC encourages students to think beyond disciplinary boundaries and approach problems from multiple perspectives by providing them with the resources to tackle any issue or goal.

Our courses, lab facilities, and workshops provide spaces for exploration, thinking through ideas, and experimenting with processes of building, designing, and creating in the digital age. DCC strongly values inclusivity and aims to cultivate a community of life-long learners who are critically engaged thinkers. Our students are the future’s makers and doers, able to expand our notions of human potential, not merely technologically but also socially and creatively.

Alexis Lothian

Immersive Media Design is the only undergraduate program in the country that synthesizes art with computer science to develop immersive media. You’ll learn how to code, create and collaborate using the latest digital tools and technologies. Code with our distinguished computer science faculty who excel in designing algorithms that improve the detail and speed of immersive displays. Create with our renowned art faculty who push the boundaries of expression and technology in new and inventive ways. Collaborate in teams to produce imaginative, innovative, and interactive experiences. Select art or computer science as the focus of your coursework to graduate with a B.A. from the College of Arts and Humanities or a B.S. from the College of Computer, Mathematical, and Natural Sciences.

Learn more about the various research projects that IMD faculty and students have been working on.

Dean Amitabh Varshney

Evan Golub

Huaishu Peng

Mathematics Professor Emeritus Michael and Eugenia Brin and the Brin Family Foundation are establishing the Maya Brin Institute for New Performance, which will add courses, expand research and fund new teaching positions, undergraduate scholarships, classroom and studio renovations, and instructional technology. Their support will boldly reimagine the future of education in the performing arts.

Leaders in the College of Arts and Humanities, where the school is housed, say the institute will advance the role of the School of Theatre, Dance, and Performance Studies (TDPS) as an innovator in design and performance, and prepare graduates to launch careers in emerging media formats such as webcasts, immersive design technology and virtual reality performance.

The Brins, parents to Google co-founder Sergey ’93 and Samuel ’09, have previously made several significant gifts to support the university’s computer science and math departments and Russian and dance programs, the latter two to honor Michael’s late mother, Maya. She emigrated with her family from the Soviet Union in 1979 and taught in UMD’s Russian program for nearly a decade. She also loved the performing arts, a love she tried to instill in her children and grandchildren by taking them to the ballet and theater, said Michael Brin.

The idea of combining the arts and technology inspired this new gift. “I want to … open opportunities to the students and faculty in interactions between new media and traditional art,” said Brin, who retired from UMD in 2011 after 31 years on its faculty.

As the COVID-19 pandemic continues, halting most live performances for nearly a year, theaters and concert venues have sought to find creative ways to present plays, dances and musical performances over a screen.

The Maya Brin Institute is “giving us the opportunity not only to experiment with new technology, but to innovate new processes to create performance,” said Jared Mezzocchi, associate professor of dance and theatre design and production. “This is a glorious opportunity for our school to reach its goals as part of a Research I institution: taking what we have explored technologically throughout the pandemic, and launching us into a future of accessible, immersive, interactive and multidisciplinary performance.”

A new light and technology studio and multimedia labs and an upgraded dance studio in the university’s Clarice Smith Performing Arts Center will provide creative space for five additional faculty positions in lighting design for camera, live digital performance, technology and multimedia production, and other fields. Full-stage green screens, GoPro cameras, laser projectors and remote rehearsal technology will broaden performing options. Future classes will include “Video Design for Dance and Theater” and “Experimental Interfaces and Physical Computations.”

TDPS, home to approximately 250 students, has long served as a pipeline of talent for the thriving Washington, D.C., theater and dance community, including the Kennedy Center, Arena Stage and Dance Place; 19 Terps were nominated for the regional Helen Hayes Awards last year.

The school combines experience on the professional-quality stages and rehearsal spaces in The Clarice with teaching from nationally recognized faculty (such as five-time Tony winner for Best Lighting, Brian MacDevitt) in the context of a liberal arts education incorporating arts, society, science and technology.

To Maura Keefe, TDPS director and associate professor of dance performance and scholarship, the institute will allow the school to propel students to the forefront of the field by focusing on what performance is about: creativity and exploration.

Andrew Cissna

The Mixed/Augmented/Virtual/Reality Community (MAVRIC) is an initiative that supports scholarship, interdisciplinary research and transformational applications of XR at the University of Maryland.

MAVRIC's mission is to catalyze the research of XR across the campus with an emphasis on projects that leverage the technology for bettering humanity.

Along with promoting the research of XR, MAVRIC also strives to:

- foster a robust, inclusive, and diverse XR community and talent pipeline;

- enable the next generation of students, researchers, and inventors to excel in creativity and innovation using the latest digital tools and technologies;

- empower a socially-focused campus and global network of XR researchers, developers, entrepreneurs, innovators, creators, funders, and policy makers.

Located on the first floor of the STEM Library, the Makerspace is home to many kinds of equipment including the Augmented Reality (AR) Sandbox.

The Augmented Reality Sandbox (ARSandbox) is an interactive box of sand connected to an Xbox Kinect and projector. Faculty and students can use it for a variety of activities including modeling topographic maps, exploring hydrologic systems, and demonstrating geomorphology principles.

We strongly encourage the use of the Sandbox for Research and Instruction.

Schools, Colleges & Faculty

College of Agriculture & Natural Resources (AGNR)

Dr. Christopher D. Ellis

School of Architecture, Planning & Preservation (ARCH)

Madlen Simon

Ming Hu, AIA, NCARB, LEED AP

College of Arts & Humanities (ARHU)

Andrew Cissna

Adriane Fang

Jason Farman

Cassandra Hradil

Alexis Lothian

Jeffrey Moro

Richard Scerbo

Juan Uriagereka

College of Behavioral and Social Sciences (BSOS)

Kathy Dow-Burger, M.A., CCC-SLP

George Hambrecht

Dr. Rashawn Ray

Elizabeth Redcay, Ph.D.

College of Computer, Mathematical & Natural Sciences (CMNS)

Dr. Amitabh Varshney

Dr. Matthias Zwicker

Dr. Ming C Lin

Dr. Aniket Bera

Larry S. Davis

Ramani Duraiswami

Dinesh Manocha

College of Education (EDUC)

Helene Cohen

Gulnoza Yakubova, Ph.D

A. James Clark School of Engineering (ENGR)

Dr. Umberto Saetti

Dr. Min Wu

College of Information

Jason Farman

Jonathan Lazar

Andrew W Smith

Philip Merrill College of Journalism (JOUR)

Josh Davidsburg

Robert H. Smith School of Business (BMGT)